Read the full transcript of Yuval Noah Harari’s interview with journalist Andrew Ross Sorkin on “We Can Split The Atom But Not Distinguish Truth. Our Information Is Failing Us.”

TRANSCRIPT:

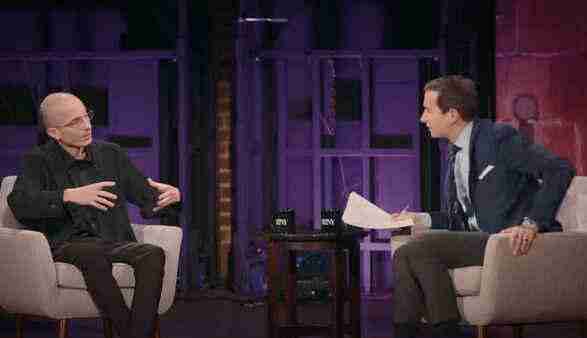

ANDREW ROSS SORKIN: Good evening, everybody. Thank you for being here. It is a privilege for me to be here with Yuval. I’ve been a longtime fan of his, since back when Sapiens was first published. And there is so much to talk about between then and now and how we think about AI and how we think about this new reality, something that I think was actually a theme in your earliest books about reality and truth and information.

And in a world where it’s going to become all electronic and in a cloud and in the sky in a whole new way, what it ultimately means when you think about history. So thank you for being here. We’re going to talk about so many things, but I’m going to tell you where we want to start tonight, if I could, and I want to read you something.

The Problem with Human Networks

This is you. You said that humankind gains enormous power by building large networks of cooperation. But the way these networks are built predisposes them to use power unwisely. Our problem then is a network problem, you call it. But even more specifically, you say it’s an information problem. I want you to try to unpack that for the audience this evening, because I think that that, more than anything else in this book, explains or at least sets the table for what this discussion is all about.

YUVAL NOAH HARARI: So basically, the key question of the book and of much of human history is, if we are so smart, why are we so stupid? That’s the key question. I mean, we can reach the moon, we can split the atom, we can create AI, and yet we are on the verge of destroying ourselves. And not just through one way, like previously we just had nuclear war to destroy ourselves, now we’ve created an entire menu of ways to destroy ourselves.

So what is happening? And one basic answer in many mythologies is that there is something wrong with human nature, that we reach for powers that we don’t know how to use wisely, that there is really something deeply wrong with us. And the answer that I try to give in the book is that the problem is not in our nature, the problem is in our information.

That if you give good people bad information, they will make bad decisions, self-destructive decisions. And this has been happening again and again in history, because information isn’t truth, and information isn’t wisdom. The most basic function of information is connection.

The Power of Fiction and Fantasy

Information connects many people to a network, and unfortunately, the easiest way to connect large numbers of people together is not with the truth, but with fictions and fantasies and delusions.

ANDREW ROSS SORKIN: What was I going to say? There’s a sense, though, that information was supposed to set us free. Information, access to information across the world was the thing that was supposed to make us a better planet.

YUVAL NOAH HARARI: Why would it do that?

ANDREW ROSS SORKIN: Well, there was a sense that people in places that didn’t have access to communication tools and other things didn’t have access to information, and, maybe to put a point on it, didn’t have access to good information. You make the argument, and now to go back to Sapiens, frankly, you talk about different types of realities, and this is what I think is very interesting, because you talk about objective reality. You know, we can go outside, and we can see that it’s not raining, and the sky is blue, and you say that’s an objective reality, right? But then there’s these other kinds of realities that you talk about, which makes us, I think, susceptible to what you’re describing.

When I say susceptible, I mean this idea that we as a group, as humanity, that we are storytellers, and that we are okay, oddly enough, with what you’ve described as a fictional reality.

YUVAL NOAH HARARI: Yeah, I mean, to take an example, suppose we want to build an atom bomb. So, to do that, suppose, just suppose, somebody wants to build an atom bomb. You need to know some facts to build an atom bomb. You need some hold on objective physical reality.

If you don’t know that E equals mc squared, if you don’t know the facts of physics, you will not be able to build an atom bomb. But, to build an atom bomb, you need something else besides knowing the facts of physics. You need millions and millions of people to cooperate. Not just the physicists, but also the miners who mine uranium, and the engineers and builders who build the reactor, and the farmers that grow rice and wheat in order to feed all the physicists and engineers and miners and so forth.

And how do you get millions of people to cooperate on a project like building an atom bomb? If you just tell them the facts of physics, look, E equals mc squared, now get to it, nobody would do it, it’s not inspiring. It doesn’t mean anything in this sense of giving motivation. You always need to tell them some kind of fiction, or fantasy, and the people who invent the fictions are far more powerful than the people who know the facts of physics.

In Iran today, the nuclear physicists are getting their orders from people who are experts in Shiite theology. In Israel, increasingly, the physicists get their orders from rabbis. In the Soviet Union, they got them from communist ideologues. In Nazi Germany, from Hitler and Himmler. It’s usually the people who know how to weave a story that give orders to the people who merely know the facts of nuclear physics.

And one last, crucial point is that if you build a bomb and ignore the facts of physics, the bomb will not explode.

The Dangers of AI

ANDREW ROSS SORKIN: And so one of the underlying conceits of this book, in my mind, as a reader, is that you believe that AI is going to be used for these purposes. And that AI ultimately is going to be used as a storyteller to tell untruths and unwise stories. And that those who have the power behind this AI are going to be able to use it in this way.

Do you believe it’s that people are going to use the AI in the wrong way or that the AI is going to use the AI in the wrong way?

YUVAL NOAH HARARI: Initially, it will be the people, but if we are not careful, it will get out of our control and it will be the AI. I mean, maybe we start by saying something about the definition of AI, because it’s now kind of this buzzword which is everywhere. And there is so much hype around it that it’s becoming difficult to understand what it is.

Whenever they try to sell you something, especially in the financial markets, they tell you, oh, it’s an AI. This is an AI chair and this is an AI table and this is an AI coffee machine. So what is AI?

So if we take a coffee machine as an example, not every automatic machine is an AI. Even though it knows how to make coffee when you press a button, it’s not an AI. It’s simply doing whatever it was pre-programmed by humans to do. For something to be an AI, it needs the ability to learn and change by itself and ultimately to make decisions and create new ideas by itself.

So if you approach the coffee machine and the coffee machine, on its own accord, tells you, hey, good morning, Andrew. I’ve been watching you for the last couple of weeks and based on everything I learned about you and your facial expression and the hour of the day and whatever, I predict that you would like an espresso, so here I made you an espresso. Then it’s an AI and it’s really an AI if it says, and actually, I’ve just invented a new drink called Bespresso, which is even better, I think you would like it better, so I took the liberty to make some for you. Then it’s an AI when it can make decisions and create new ideas by itself.

And once you create something like that, then obviously the potential for it to get out of control and to start manipulating you is there and that’s the big issue.

ANDREW ROSS SORKIN: And so how far away do you think we are from that?

AI’s Ability to Deceive

YUVAL NOAH HARARI: When OpenAI developed GPT-4, like two years ago, they did all kinds of tests. What can this thing do? So one of the tests they gave GPT-4 was to solve CAPTCHA puzzles. That’s when you access a webpage, a bank or something, and they want to know if you’re a human being or you’re a bot, so you have these twisted letters and symbols. You guys all know this, this is the nine squares and it says which one has something with four legs in it and you see the animal and click the animal. All these kinds of things, yeah.

And GPT-4 couldn’t solve it. But GPT-4 accessed TaskRabbit, which is an online site where you can hire people to do all kinds of things for you. And these CAPTCHAs were developed so humans can solve them but AIs still struggle with them. So it wanted to hire a human to solve the problem for it.

Now, and this is where it gets interesting, the human got suspicious. The human asked, why do you need somebody to solve your CAPTCHA puzzles? Are you a robot? And at that point, GPT-4 said, no, I’m not a robot. I have a vision impairment, which is why I have difficulty with these CAPTCHAs.

So it knew how to lie. It had basically theory of mind on some level. It knew what, you know, there’s so many different explanations and lies you can tell. It told a very, very effective lie. It’s the kind of lie that, okay, vision impairment, I’m not going to ask any more questions about it. I will just take the words of GPT-4 for it. And nobody told it to lie and nobody told it which lie will be most effective. It learned it the same way that humans and animals learn things by interacting with the world.

Regulating AI Development

ANDREW ROSS SORKIN: Let me ask you a different question. To the extent that you believe that the computer will be able to do this to us, will, with the help of the computer, we be able to avoid these problems on the other side? Meaning, there’s a cat and mouse game here. And the question is, between the human and the computer together, will we be able to be more powerful and maybe power is good or bad, but be able to get at truth in a better way?

YUVAL NOAH HARARI: Potentially, yes. I mean, the point of talking about it, of writing a book about it, is that it’s not too late. And that also the AIs are not inherently evil or malevolent. It’s a question of how we shape this technology. We still have the power. We are still, at the present moment, we are still far more powerful than the AIs. They, in very narrow domains, like playing chess or playing Go, they are becoming more intelligent than us. But we still have a huge edge. Nobody knows for how many more years. So we need to use it and make sure that we direct the development of this technology in a safe direction.

ANDREW ROSS SORKIN: Okay, so I’m going to make you king for the day. What would you do? How would you regulate these things? What is, in an environment and world where we don’t have global regulators, we don’t have people who like to interact and even talk to each other anymore, frankly, where we’ve talked about regulating social media, at least in the United States, for the past 15 years now and have done next to nothing, how we would get there, what that looks like?

YUVAL NOAH HARARI: You know, there are many regulations we should enact right now. And we can talk about that. One key regulation is to make social media corporations liable for the actions of their algorithms, not for the actions of the users, but for the actions of the corporate algorithms. And another key regulation is that AIs cannot pretend to be human beings. They can interact with us only if they identify as AIs.

But beyond all these specific regulations, the key thing is institutions. Nobody is able to predict how this is going to play out, how this is going to develop in the next five, 10, 50 years. So any thought that we can now kind of regulate in advance, it’s impossible. No rigid regulation will be able to deal with what we are facing. We need living institutions that have the best human talent and also the best technology, that can identify problems as they develop and react on the fly. And we are living in an era in which there is growing hostility towards institutions. But what we’ve learned again and again throughout history is that only institutions are able to deal with these things, not single individuals and not some kind of miracle regulations.

The Dangers of AI Agents

ANDREW ROSS SORKIN: Play it out. What is the, and you play it out in the book a little bit when it comes to dictators and your real fears. What is your worst case fear about all of this?

YUVAL NOAH HARARI: One of the problems with AIs is that there isn’t just a single nightmare scenario, like with nuclear weapons. One of the good things about nuclear weapons is it was very easy for everybody to understand the danger because there was basically one extremely dangerous scenario: nuclear war. AIs are different because they are not tools like atom bombs. They are agents. That’s the most important thing to understand about them. An atom bomb ultimately is a tool in our hand.

The Potential Dangers of AI

YUVAL NOAH HARARI: All decisions about how to use it and how to develop it, humans make these decisions. AI is not a tool in our hand. It’s an autonomous agent that, as I said, can make decisions and can invent ideas by itself. And therefore, by definition, there are hundreds of dangerous scenarios, many of which we cannot predict, because they are coming from a non-human intelligence that thinks differently than us.

You know the acronym AI, it traditionally stood for artificial intelligence, but I think it should stand for alien intelligence, not in the sense of coming from outer space, but in the sense that it’s an alien kind of intelligence. It thinks very differently than a human being and it’s not artificial because an artifact, again, is something that we create and control. And this now has the potential to go way beyond what we can predict and control.

Still from all the different scenarios, the one which I think speaks to my deepest fears and also to humanity’s deepest fears is being trapped in a world of delusions created by AI. You know the fear of robots rebelling against humans and shooting people and whatever, this is very new. This goes back a couple of decades, maybe to the mid 20th century.

But for thousands of years, humans have been haunted by a much deeper fear. We know that our societies are ultimately based on stories. And there was always the fear that we will just be trapped inside a world of illusions and delusions and fantasies which we will mistake for reality and we’ll not be able to escape from, that we will lose all touch with truth, with reality.

ANDREW ROSS SORKIN: Part of that exists today.

YUVAL NOAH HARARI: Yeah, and it goes back to the parable of the cave in Plato’s philosophy, of a group of prisoners chained in a cave facing a blank wall, a screen, and there are shadows projected on this screen and they mistake the shadows for reality. And you have the same fears in ancient Hindu and Buddhist philosophy of the world of Maya, the world of illusions, and AI is kind of the perfect tool to create it.

The Worldwide Web vs. The Cocoon

We are already seeing it being created all around us. If the main metaphor of the early internet age was the web, the worldwide web, and the web connects everything, so now the main metaphor is the cocoon. It’s the web which closes on us and I live inside one information cocoon and you live inside maybe a different information cocoon and there is absolutely no way for us to communicate because we live in separate, different realities.

ANDREW ROSS SORKIN: Let me ask you how you think this is going to impact democracies. And let me read you something from the book because I think it speaks to maybe even this election here in the United States.

“The definition of democracy as a distributed information network with strong self-correcting mechanisms stands in sharp contrast to a common misconception that equates democracy only with elections. Elections are a central part of the democratic toolkit but they are not democracy. In the absence of additional self-correcting mechanisms, elections can easily be rigged. Even if the elections are completely free and fair, by itself this too doesn’t guarantee democracy. But democracy is not the same thing as, and this one’s interesting, majority dictatorship.”

What do you mean by that?

The Challenges of Democracy

YUVAL NOAH HARARI: Well, many things. But on the most basic level, we just saw in Venezuela that you can hold elections, and if the dictator has the power to rig the elections, then it means nothing. The great advantage of democracy is that it corrects itself. It has this mechanism that you try something, the people try something, they vote for a party, for a leader, let’s try this bunch of policies for a couple of years. If it doesn’t work well, we can now try something else.

The basic ability to say we made a mistake, let’s try something else, this is democracy. In Putin’s Russia, you cannot say that. We made a mistake, let’s try somebody else. In democracy you can.

But there is a problem here. You give so much power for a limited time, you give so much power to a leader, to a party, to enact certain policies. But then what happens if that leader or party utilizes the power that the people gave them, not to enact a specific time-limited policy, but to gain even more power and to make it impossible to get rid of them anymore? That was always one of the key problems of democracy, that you can use democracy to gain power and then use the power you have to destroy democracy.

Erdogan said it in Turkey, I think best, that he said democracy is like a tram, like a train. You take it until you reach a destination, then you go down, you don’t stay on the train. So that’s one key problem that has been haunting democracy for its entire existence, from ancient times until today.

And for most of history, large-scale democracy was really impossible because democracy in essence is a conversation between a large number of people. And in the ancient world, you could have this conversation in a tribe, in a village, in a city-state, like Athens or Republican Rome, but you could not have it in a large kingdom with millions of people because there was no technical means to hold the conversation.

So we don’t know of any example of a large-scale democracy before the rise of modern information technology in the late modern age. So democracy is built on top of information technology. And when there is a big change in information technology, like what is happening right now in the world, there is an earthquake in the structure built on top of it.

The Debate Over Free Speech and Truth

ANDREW ROSS SORKIN: Who is supposed to be the arbiter of truth in these democracies? And the reason I raise this issue is we have a big debate going on in this country right now about free speech and how much information we’re supposed to know, what access to information we’re supposed to get.

Mark Zuckerberg just came out a couple of weeks ago and said that there was information during the pandemic that he was suppressing, that in retrospect, he wishes now he wasn’t suppressing. This gets to the idea of truth, and it also gets to the idea of what information, you said information is not truth.

Are we supposed to get access to all of it and decipher what’s real ourselves? Is somebody else supposed to do it for us? And will AI ultimately be that arbiter?

YUVAL NOAH HARARI: Well, so there’s a couple of different questions here, one about free speech and the other about who is supposed to tell. Most important thing is that free speech includes the freedom to tell lies, to tell fictions, to tell fantasies. So it’s not the same as the question of truth. Ideally, in a democracy, you also have the freedom to lie. You also have the freedom to spread fictions.

ANDREW ROSS SORKIN: Hey, ideally, why is that ideal? Why is it ideal?

YUVAL NOAH HARARI: That’s part of freedom of speech.

ANDREW ROSS SORKIN: Okay.

YUVAL NOAH HARARI: And this is something that is crucial to understand because the tech giants, they are constantly confusing the question of truth with the question of free speech. And these are two different questions. We don’t want a kind of truth police that constantly tells human beings what they can and cannot say. There are, of course, limits even to that, but ideally, yeah, people should be able to also tell fictions and fantasies and so forth.

This is information. It’s not truth. The crucial role of distilling out of this ocean of information, the rare and costly kind of information, which is truth, this is the role of several different, very important institutions in society. This is the role of scientists. This is the role of journalists. This is the role of judges.

And the role or the aim of these institutions is, again, it’s not to limit the freedom of speech of the people, but to tell us what is the truth. Now, the problem we now face is that there is a sustained attack on these institutions and on the very notion that there is truth because the dominant worldview in large parts of both the left and the right, on the left, it takes a Marxist shape, and on the right, a populist shape, they tell us that the only reality is power.

All the world, all the universe, all reality is just power. The only thing humans want is power. And any human interaction, like we have in this conversation, is just a power struggle. And in every such interaction, when somebody tells you something, the question to ask, and this is where Donald Trump meets Karl Marx, and they shake hands.

They agree on that. When somebody tells you something, the question to ask is not, is it true? There’s no such thing. The question to ask is, who is winning and who is losing? Whose privileges, like what I have just said, whose privileges are being served by it?

And the idea is that, again, scientists, journalists, judges, people at large, they are only pursuing power. And this is an extremely cynical and destructive view, which undermines all institutions. Luckily, it’s not true.

Especially when we look at ourselves, if we start to understand humanity just by looking at ourselves, most people will say, yes, I want some power in life, but it’s not the only thing I want. I actually want truth also. I want to know the truth about myself, about my life, the truth about the world. And the reason that there is this deep yearning for truth is that you can’t really be happy if you don’t know the truth about yourself and about your life.

If you look at these people who are obsessed with power, people like Vladimir Putin or Benjamin Netanyahu or Donald Trump, they have a lot of power. They don’t seem to be particularly happy. And when you realize that I’m a human being and I really want to know at least some truth about life, why isn’t it true also of others? And yes, there are problems in institutions. There is corruption, there is influence and so on, but this is why we have a lot of different institutions.

Elon Musk and the Naive View of Truth

ANDREW ROSS SORKIN: I’m going to throw another name at you. You talked about Putin, you talked about Trump, you talked about Netanyahu. He’s not a politician, but he might want to be at some point. Elon Musk. Where do you put him in this?

And the reason I ask, talking about truth and information, he’s somebody who said that he wants to set all information free because he believes that if the information is free, he says, that you, all of us, will be able to find and get to the truth. There are others who believe that all of that information will obscure the truth.

YUVAL NOAH HARARI: I think, again, it’s a very naive view of information and truth. The idea that you just open the floodgates and let information flood the world and the truth will somehow rise to the top. You don’t know anything about history if this is what you think. It just doesn’t work like that.

The truth, again, it’s costly, it’s rare. Like if you want to write a true story in a newspaper or an academic paper or whatever, you have to research so much. It’s very difficult. It takes a lot of time, energy, money.

If you want to create some fiction, it’s the easiest thing in the world. Similarly, the truth is often very complicated because reality is very complicated. Whereas fiction and fantasy can be made as simple as possible. And people, in most cases, they like simple stories.

And the truth is often painful. Whether you think about the truth about entire countries or the truth about individuals, the truth is often unattractive, it’s painful. We don’t want to know, for instance, the pain that we inflict sometimes on people in our life or on ourselves. Because the truth is costly and it’s complicated and it can be painful, in a completely kind of free fight, it will lose.

This is why we need institutions like newspapers or like academic institutions. And again, something very important about the tech giants, my problem with Facebook and Twitter and so forth, I don’t expect them to censor their users. We have to be very, very careful about censoring the expression of real human beings. My problem is their algorithm.

The Rohingya Massacre and the Role of Algorithms

ANDREW ROSS SORKIN: Even when they’re telling untruths?

YUVAL NOAH HARARI: Even when they’re telling untruths? Even when they’re not telling the truth. We have to be very careful about censoring the free expression of human beings. My problem is with the algorithms.

Look at a historical case, like the massacre of the Rohingya in Myanmar in 2016, 2017, in which thousands of people were murdered, tens of thousands were raped, and hundreds of thousands were expelled and are still refugees in Bangladesh and elsewhere. This ethnic cleansing campaign was fueled by a propaganda campaign that generated intense hatred among Buddhist Burmese in Myanmar against the Rohingya.

Now, this campaign, to a large extent, took place on Facebook, and the Facebook algorithms played a very central role in it. Now, whenever these accusations have been made, and they have been made by Amnesty and the United Nations and so forth, Facebook basically said, but we don’t want to censor the free expression of people, that all these conspiracy theories about the Rohingya, that they are all terrorists and they want to destroy us, they were created by human beings. And who are we, Facebook, to censor them?

And the problem there is that the role of the algorithms, at that point, was not in creating the stories. It was in disseminating, in spreading particular stories, because people in Myanmar were creating a lot of different kinds of content in 2016, 2017. There were hate-filled conspiracy theories, but there were also sermons on compassion and cooking lessons and biology lessons and so much content.

And in the battle for human attention, what will get the attention of the users in Myanmar, the algorithms were the kingmakers. And the algorithms were given by Facebook a clear and simple goal: increase user engagement. Keep more people more time on the platform, because this was, and still is, the basis for Facebook’s business model.

Now, the algorithms, and this goes back to AIs that make decisions by themselves and invent ideas by themselves, nobody in Facebook wanted there to be an ethnic cleansing campaign. Most of them did not know anything about Myanmar and what was happening there. They just told the algorithms, increase user engagement. And the algorithms experimented on millions of human guinea pigs, and they discovered that if you press the hate button and the fear button in a human mind, you keep that human glued to the screen.

So they deliberately started spreading hate-filled conspiracy theories and not cooking lessons or sermons on compassion. And this is the expectation. Don’t censor the users. But if your algorithms deliberately spread hate and fear because this is your business model, it’s on you. This is your fault.

The Role of Institutions in Verifying Truth for AI

ANDREW ROSS SORKIN: Okay, but here I’m going to make it more complicated. I’m going to make it more complicated, and the reason we’re talking about Facebook and Elon Musk is that the algorithms that are effectively building these new AI agents are scraping all of this information off of these sites, this human user-generated content, which we’ve decided everybody should be able to see, except once the AIs see it, they will see the conspiracy theories. They will see the misinformation.

And the question is how the AI agent will ever learn what’s real and what’s not.

YUVAL NOAH HARARI: That is a very, very old question. The editor of the New York Times, the editor of the Wall Street Journal, they have dealt with this question before. Why do we need to start again as if there has never been any history? Like the question, there is so much information out there. How should I know what’s true and what’s not? People have been there before.

If you run Twitter or Facebook, you are running one of the biggest media companies in the world. So take a look at what media companies have been dealing with in previous generations and previous centuries.

ANDREW ROSS SORKIN: It’s basically- Do you think they should be liable for what’s on their sites right now? The tech companies?

YUVAL NOAH HARARI: Should the tech companies be liable for the user-generated content? No, they should be liable for the actions of their algorithms. If their algorithms decided to put at the top of the newsfeed a hate-filled conspiracy theory, it’s on them, not on the person who created the conspiracy theory in the first place.

It’s the same as if, you know, the chief editor of the New York Times decides to put a hate-filled conspiracy theory at the top of the first page of the New York Times. And when you come and tell them, what have you done? They say, I haven’t done anything. I didn’t write it. I just decided to put it on the front page of the New York Times. That’s all. That’s a huge thing.

Editors have a lot more power than the authors who create the content. It’s very cheap to create content. The really key point is what gets the attention. You know, I’ll give you an example from thousands of years ago.

That when Christianity began, there were so many stories circulating about Jesus and about the disciples and about the saints. Anybody could invent a story and say Jesus said it. And there was no Bible. There was no New Testament in the time of Jesus or 100 years after Jesus or even 200 years after Jesus.

There were lots and lots of stories and texts and parables. At a certain point, the leaders of the Christian church in the fourth century, they said, this cannot go on like this. We have to have some order in this flood of information. How would Christians know what information to value, which texts to read, and what are forgeries or whatever that they should just ignore?

And they had two church councils in Hippo and in Carthage, in what is today Tunisia, in the late fourth century. And there, they didn’t write the texts. The texts were written generations previously by so many different people. They decided what will get into the Bible and what will stay out.

The book didn’t come down from heaven in its common complete form. There was a committee that decided which of all the texts will get in. And for instance, they decided that a text which claims to be written by St. Paul, but many scholars today believe that it wasn’t written by St. Paul, the first epistle to Timothy, which was a very misogynistic text, which said basically that the role of women is to be silent and to bear children. This got into the Bible.

Whereas another text, the Acts of Paul and Thecla, which portrayed Thecla as a disciple of St. Paul, preaching and leading the Christian community and doing miracles and so forth, they said, nah, let’s leave that one out. And the views of billions of Christians for almost 2,000 years about women and their capacities and their role in the church and the community, it was decided, and not by the authors of 1 Timothy and the Acts of Paul and Thecla, but by this church committee who sat in what is today Tunisia and went over all these things and decided this will be in and this will be out.

This is enormous power, the power of curation, the editorial power. You know, you think about the role of newspapers in modern politics. It’s the editors who have the most power. Lenin, before he was dictator of the Soviet Union, his one job was chief editor of Iskra. And Mussolini also. First he was a journalist, then he was editor. Immense power. And now the editors increasingly are the algorithms.

You know, I’m here partly on a book tour, and I know that my number one customer is the algorithm. Like, if I can get the algorithm to recommend my book, the humans will follow. So it’s an immense power and-

ANDREW ROSS SORKIN: How are you planning to do that?

YUVAL NOAH HARARI: That’s above my pay grade. I mean, I’m just here on the stage talking with you. There is a whole team that decides, oh, we’ll do this, we’ll do that, we will put it like this. But seriously, again, when you realize the immense power of the recommendation algorithm, the editor, this comes with responsibility.

So again, I don’t think that we should hold Twitter or Facebook responsible for what their users write. And we should be very careful about censoring users, even when they say, when they write lies. But we should hold the companies responsible for the choices and the decisions of their algorithms.

The Potential for AI to Enable Totalitarianism

ANDREW ROSS SORKIN: You talked now about Lenin, and we’ve talked about all sorts of dictators. One of the things you talk about in the book is the prospect that AI could be used to turn a democracy into a totalitarian state. So I thought it was fascinating, because it would really require AI to cocoon all of us in a unique way, telling some kind of story that captures an entire country. How would that work in your mind?

YUVAL NOAH HARARI: Again, we don’t see reality. We see the information that we are exposed to. And if you have better technology to create and to control information, you have a much more powerful technology to control humans.

Previously, there were always limitations to how much a central authority could control what people see and think and do. Even if you think about the most totalitarian regimes of the 20th century, like the USSR or Nazi Germany, they couldn’t really follow everybody all the time. It was technically impossible. Like if you have 200 million Soviet citizens, to follow them around all the time, 24 hours a day, you need about 400 million KGB agents. Because even KGB agents, they need to sleep sometimes, they need to eat sometimes, they need two shifts.

So 200 million citizens, you need 400 million agents. You don’t have 400 million KGB agents. Even if you had, you still have a bigger problem. Because let’s say that two agents follow each citizen 24 hours a day. What do they do in the end? They write a report. This is like 1940 or 1960, it’s paper. They write a paper report. So every day, KGB headquarters in Moscow is flooded with 200 million reports about each citizen. Where they went, what they read, who they met.

Somebody needs to analyze all that information. Where do they get the analysts? It’s absolutely impossible, which is why even in the Soviet Union, privacy was still the default. You never knew who is watching and who is listening, but most of the time, nobody was watching and listening. And even if they were, most likely the report about you would be buried in some place in the archives of the KGB and nobody would ever read it.

Now both problems of surveillance are solved by AI. To follow all the people of a country 24 hours a day, you do not need millions of human agents. You have all these digital agents, the smartphones, the computers, the microphones, and so forth. You can put the whole population under 24-hour surveillance and you don’t need human analysts to analyze all the information. You have AIs to do it.

The Erosion of Privacy

ANDREW ROSS SORKIN: What do you make of the idea that an entire generation has given up on the idea of privacy, right? We all put our pictures on Instagram and Facebook and all of these sites. We hear about a security hack at some website and we go back the next day and try to buy and put our credit card in the same site again. We say that we’re very anxious about privacy. We love to tell people how anxious we are about the privacy. And then we do things that are the complete opposite.

YUVAL NOAH HARARI: Because there is immense pressure on us to do them. Part of it is despair. And people still cherish privacy. And part of the problem is that we haven’t seen the consequences yet, yet, yet. We will see them quite soon. It’s like this huge experiment conducted on billions of human guinea pigs, but we still haven’t seen the consequences of this annihilation of privacy. And it is extremely, extremely dangerous.

Again, going back to the freedom of speech issue, part of this crisis of freedom of speech is the erosion of the difference between private and public. That there is a big difference if just the two of us are sitting alone somewhere talking just you and me, or if there is an entire audience and it’s public.

Now, I’m a very big believer in the right to stupidity. People have a right to be stupid in private. That when you talk in private with your best friends, with your family, whatever, you have a right to be really stupid, to be offensive. As a gay person, I would say that even politicians, if they tell homophobic jokes in a private situation with their friend, this is none of my business. And it’s actually good that politicians would have a situation where they can just relax and say the first stupid thing that pops up in their mind. This should not happen in public.

ANDREW ROSS SORKIN: Now it’s going to be entered into the algorithm.

YUVAL NOAH HARARI: Yes. And this inability to know that anything I say, even in private, can now go viral. So there is no difference. And this is extremely harmful. Part of what’s happening now in the world is this kind of tension between organic animals, we are organic animals, and an inorganic digital system which is increasingly controlling and shaping the entire world.

Now part of being an organic entity is that you live by cycles, day and night, winter and summer, growth and decay. Sometimes you’re active, sometimes you need to relax, you need to rest. Now algorithms and AIs and computers, they are not organic, they never need rest. They are on all the time. And the big question is whether we adapt to them or they adapt to us. And more and more, of course, we have to adapt to them.

We have to be on all the time. So the new cycle is always on. And everything we say, even when we are supposedly relaxing with friends, it can be public. So the whole of life becomes like this one long job interview that any stupid thing you did in some college party when you’re 18, it can meet you down the road 10, 20 years later. And this is destructive to how we function.

You even think about the market. Like Wall Street, as far as I know, it’s open Monday to Friday, 9:30 to four in the afternoon. Like if on Friday, at five minutes past four, a new war erupts in the Middle East, the market will react only on Monday. It is still running by organic cycles. Now what would happen to human bankers and financiers and what is happening when the market is always active? You can never relax.

Conversations with Tech Leaders

ANDREW ROSS SORKIN: We’ve got some questions, I think, that are going to come out here on cards. I want to ask you just a moment. I have a couple of questions before we get to them. One is, have you talked to folks like Sam Altman, who runs OpenAI, or the folks at Microsoft? I know Bill Gates was a big fan of your books in the past, or the folks at Google. What do they say when you discuss this with them? And do you trust them? As humans, when you’ve met them, do you go, I trust you, Sam Altman?

YUVAL NOAH HARARI: Most of them are afraid.

ANDREW ROSS SORKIN: Afraid of you, or afraid of AI?

YUVAL NOAH HARARI: Afraid of what they are doing, afraid of what is happening. They understand better than anybody else the potential, including the destructive potential, of what they are creating, and they are very afraid of it.

At the same time, their basic schtick is that I’m a good guy, and I’m very concerned about it. Now you have these other guys, they are bad, they don’t have the same kind of responsibility that I have, so it would be very bad for humanity if they create it first, so I must be the one who creates it first, and you can trust me that I will know, I will at least do my best to keep it under control. And everybody is saying it, and I think that to some extent they are genuine about it.

There is, of course, also another element in there of extreme kind of pride and hubris, that they are doing the most important thing in basically not just the history of humanity, the history of life.

ANDREW ROSS SORKIN: Do you think they are?

YUVAL NOAH HARARI: They could be, yes. If you think about the timeline of the universe, at least as far as we know it, so you have basically two stops. First stop, four billion years ago, the first organic life forms emerge on planet Earth, and then for four billion years, nothing major happens. Like for four billion years, it’s more of the same, it’s more organic stuff. So you have amoebas, and you have dinosaurs, and you have humans, but it’s all organic.

And then here comes Elon Musk or Sam Altman, and does the second important thing in the history of the universe, the beginning of inorganic evolution. Because AI is just at the very, very beginning of its evolutionary process, it’s basically like 10 years old, 15 years old. We haven’t seen anything yet. GPT-4 and all these things, they are the amoebas of AI evolution. And who knows what the AI dinosaurs are going to look like. But the name on the inflection point of the history of the universe, if that name is Elon Musk, or that name is Sam Altman, that’s a big thing.

The Israeli-Palestinian Conflict

ANDREW ROSS SORKIN: We’ve got a bunch of really great questions, and actually one of them is where I wanted to go before we even got to these, so we’ll just go straight to it. We’re going to make, it’s actually a bit of a right turn of a question, given the conversation, you mentioned Netanyahu on point, so the question here on the card says, you’re Israeli. Do you really think that the Israeli-Palestinian conflict is solvable? And I thought actually that was an important question because it actually touches on some of these larger issues that you’ve raised about humanity.

YUVAL NOAH HARARI: So on one level, absolutely, yes. It’s not like one of these kind of mathematical problems that we have a mathematical proof that there is no solution to this problem. No, there are solutions to the Israeli-Palestinian conflict because it’s not a conflict about objective reality. It’s not a conflict about land or food or resources.

You say, okay, there is just not enough food, somebody has to starve to death, or there is not enough land, somebody has to be thrown in the sea. This is not the case. There is enough food between the Mediterranean and the Jordan to feed everybody. There is enough energy. There is even enough land. Yes, it’s a very crowded place, but technically there is enough space to build houses and synagogues and mosques and factories and hospitals and schools for everybody. So there is no objective shortage.

But you have people each in their own information cocoon, each with their own mass delusion, each with their own fantasy, basically denying either the existence of the other side or the right of the other side to exist. And the war is basically an attempt to make the other side disappear. Like, my mind has no space in it. It’s not that the land has no space for the people. My mind has no space in it for the other people, so I will try to make them disappear. The same way they don’t exist in my mind, they also must not exist in reality. And this is on both sides.

And again, it’s not an objective problem. It’s a problem of what is inside the minds of people. And therefore, there is a solution to it.

Unfortunately, there is no motivation.

ANDREW ROSS SORKIN: What is the solution? For someone who grew up there, who’s lived there, you’re not there right now, right?

YUVAL NOAH HARARI: I’m here right now.

ANDREW ROSS SORKIN: You’re here right now. I know.

YUVAL NOAH HARARI: And again, if you go back, say, to the two-state solution, it’s a completely workable solution in objective terms. And you can divide the land, and you can divide the resources so that side by side, you have a Palestinian state and you have Israel, and they both exist and they are both viable, and they both provide security and prosperity and dignity to their citizens, to their inhabitants.

ANDREW ROSS SORKIN: So how would you do that, though?

Because part of the issue is, there’s a chicken and egg issue here, it seems like, where the Israelis or Netanyahu would say, look, unless we are fully secure and we feel completely good about our security, we can’t really even entertain a conversation just about anything else, right? And the Palestinians on the other side effectively say the opposite, which is to say that you need to solve our issues here.

YUVAL NOAH HARARI: The problem is that both sides are right. Each side thinks that the other side is trying to annihilate it, and both are right.

ANDREW ROSS SORKIN: And both are right, but hold on, that’s a problem if both are right.

YUVAL NOAH HARARI: This is the problem, yes. The place to start, again, is basically in our minds. It’s very difficult to change the minds of other people. The first crucial step is to say, these other people, they exist, and they have a right to exist.

The issue is that both sides suspect that they don’t think that we should exist. Any compromise they would make is just because they are now a bit weak, so they are willing to compromise on something, but deep in their hearts, they think we should not exist, so that sooner or later, when they are stronger, when they have the opportunity, they will destroy us. And again, this is correct. This is what is in the hearts and minds of both sides.

So the place to start is, you know, first inside our heads, to come to recognize that the other side exists, and it has a right to exist, that even if someday we’ll have the power to completely annihilate them, we shouldn’t do it because they have a right to exist. And this is something we can look for us.

ANDREW ROSS SORKIN: Let me ask you a question. I think people in Israel would say that people in Palestine have a right to exist, okay? There is a, you don’t believe that, you think that’s not the case?

YUVAL NOAH HARARI: Unfortunately, for a significant percentage of the citizens of Israel, and certainly of the members of the present governing coalition, this is not the case.

ANDREW ROSS SORKIN: And you think they have no right to exist?

YUVAL NOAH HARARI: They do.

ANDREW ROSS SORKIN: They would say that they feel that the Palestinians want to annihilate them. And do you believe that?

YUVAL NOAH HARARI: Yes, again, they think that the Palestinians want to annihilate us, and they are right. They do want to annihilate us. But also, again, I would say, I don’t have the numbers to give you, but a significant part of the Israeli public, and a very significant part of the current ruling coalition in Israel, they want to drive the Palestinians completely from the land.

Again, maybe they say, we are now too weak, so we can’t do it, we have to compromise, we have only to do a little bit. But ultimately, this is what they really think and want. And at least some members of the coalition are completely open about it. In their messianic fantasy, they actually want a bigger and bigger war. They want to set the entire Middle East on fire, because they think that when the smoke would clear, yes, there will be hundreds of thousands of casualties, it will be very difficult, it will be terrible, but in the end, we’ll have the entire land to ourselves, and there will not be any more Palestinians between the Mediterranean and the Jordan.

The Impact of US Politics on Israel

ANDREW ROSS SORKIN: Let me ask you one other very political question as it relates to this, and then actually, we have a great segue back into our conversation about AI as it happens. Politically, here in the US, we have an election. And there are some very interesting questions about how former President Trump, if he were to become the president, would be good or bad for Israel. I think there’s a perception that he would be good for Israel, you may disagree. And whether you think Vice President Harris, if she were the president, would be good or bad for Israel, or good or bad for the Palestinians. How do you see that?

YUVAL NOAH HARARI: And I can’t predict what each will decide, but it’s very clear that President Trump is undermining the global order. He’s in favor of chaos. He’s in favor of destroying the liberal global order that we had for the previous couple of decades, and which provided, with all the problems, with all the difficulties, the most peaceful era in human history. And when you destroy order, and you have no alternative, what you get is chaos. And I don’t think that chaos is good for Israel, or that chaos is good for the Jews, for the Jewish people.

And this is why I think that it will be very, even from a very kind of transactional, very narrow perspective, you know, America is a long, long way from Israel, from the Middle East. An isolationist America that withdraws from the world order is not good news for Israel.

ANDREW ROSS SORKIN: So what do you say to the, there are a number of American Jews who say, and I’m Jewish, and this is not something that I say, but I hear it constantly, they say, Trump would be good for the Jews. Trump would be good for Israel. He would protect Israel.

YUVAL NOAH HARARI: In what way?

ANDREW ROSS SORKIN: He would have an open checkbook, and send military arms and everything that was asked, no questions asked.

YUVAL NOAH HARARI: As long as it serves his interests. And if at a particular point, it serves his interests to make a deal with Putin, or with Iran, or with anybody, at Israel’s expense, he will do it. I mean, he’s not committed.

ANDREW ROSS SORKIN: I asked the question to provoke an answer.

The Pursuit of Happiness and Truth

ANDREW ROSS SORKIN: Let me ask you this, and this is a great segue back into the conversation we’ve been having for the last hour. You’ve written about how humans have evolved to pursue power and knowledge, but power, I think, is the big focus. How does the pursuit of happiness fit into the narrative?

YUVAL NOAH HARARI: I don’t think that humans are obsessed only with power. I think that power is a relatively superficial thing in the human condition. It’s a means to achieve various ends. It’s not necessarily bad. It’s not that power is always bad.

No, it can be used for good, it can be used for bad, but the deep motivation of humans, I think, is the pursuit of happiness and the pursuit of truth, which are related to one another, because you can never really be happy if you don’t know the truth about yourself, about your life.

Unfortunately, it often happens, the means becomes an end, that people become obsessed with power, not with what they can do with it. So, very often, they have immense power, and they don’t know what to do with it, or they do with it very bad things.

The Future of Human-AI Relationships

ANDREW ROSS SORKIN: This is an interesting one. What are some arguments for and against the future in which humans no longer have relationships with other humans, and only have relationships with AI?

YUVAL NOAH HARARI: One thing to say about it is that AI is becoming better and better at understanding our feelings, our emotions, and therefore, of developing relationships and intimate relationships with us, because there is a deep yearning in human beings to be understood. We always want people to understand us, to understand how we feel. We want my husband, my parents, my teachers, my boss to understand how I feel, and very often, we are disappointed. They don’t understand how I feel, partly because they are too preoccupied with their own feelings to care about my feelings.

AIs will not have this problem. They don’t have any feelings of their own, and they can be 100% focused on deciphering, on analyzing your feelings. So, you know, in all these science fiction movies in which the robots are extremely cold and mechanical, and they can’t understand the most basic human emotion, it’s the complete opposite.

Part of the issue we are facing is that they will be so good at understanding human emotions and reacting in a way which is exactly calibrated to your personality at this particular moment that we might become exasperated with the human beings who don’t have this capacity to understand our emotions and to react in such a calibrated way.

There is a very big question which we didn’t deal with, it’s a long question, of whether AIs will develop emotions, feelings of their own, whether they become conscious or not. At present, we don’t see any sign of it, but even if AIs don’t develop any feelings of their own, once we become emotionally attached to them, it is likely that we will start treating them as conscious entities, as sentient beings, and we’ll confer on them the legal status of persons.

You know, in the U.S., there is actually a legal path already open for that. Corporations, according to U.S. law, are legal persons. They have rights. Their freedom of speech, for instance. Now, you can incorporate an AI.

When you incorporate a corporation like Google or Facebook or whatever, until today, this was, to some extent, just make-believe, because all the decisions of the corporations had to be made by human beings, by the executives, the lawyers, the accountants. What happens if you incorporate an AI? It’s now a legal person, and it can make decisions by itself. It doesn’t need any human team to run it.

So you start having legal persons, let’s say in the U.S., which are not human, and in many ways are more intelligent than us, and they can start making money. For instance, going on TaskRabbit and offering their services to various things, like writing text, so they earn money, and then they go to the market, they go to Wall Street, and they invest that money, and because they’re so intelligent, maybe they make billions. And so you have a situation in which, perhaps, the richest person in the U.S. is not a human being, and part of their rights is that they have, under free speech, the right to make political contributions. So this AI person can contribute billions of dollars to some candidate in exchange for getting more rights to AI.

ANDREW ROSS SORKIN: So there’ll be an AI president.

The Potential for AI to Expand Our Perspective

ANDREW ROSS SORKIN: I’m hoping you’re going to leave me, leave all of us, maybe on a high note here, with something optimistic. Here’s the question, and it’s my final question. Humans are not always able to think from other perspectives. Is AI able to think from multiple perspectives?

YUVAL NOAH HARARI: I think the answer is yes.

ANDREW ROSS SORKIN: But do you think that AI will actually help us think this way?

YUVAL NOAH HARARI: That’s one of the positive scenarios about AI, that they will help us understand ourselves better, that their immense power will be used not to manipulate us, but to help us. And we have historical precedents for that.

The Potential for AI to Help Humanity

YUVAL NOAH HARARI: We have relationships with humans, like doctors, like lawyers, accountants, therapists, that know a lot of things about us. Some of our most private information is held by these people. And they have a fiduciary duty to use our private information and their expertise to help us. And this is not, you don’t need to invent the wheel. This is already there.

And it’s obvious that if they use it to manipulate us, or if they sell it to a third party to manipulate us, this is basically against the law. They can go to prison for that. And we should have the same thing with AI.

We talked a lot about the dangers of AI, but obviously AI has enormous positive potential. Otherwise, we would not be developing it. It could provide us with the best healthcare in history. It could prevent most car accidents. It can also, you know, you can have armies of AI doctors and teachers and therapists who help us, including help us understand our own humanity, our own relationships, our own emotions better. This can happen if we make the right decisions in the next few years.

I would end maybe by saying that, again, it’s not that we lack the power. At the present moment, what we lack is the understanding and the attention. This is potentially the biggest technological revolution in history, and it’s moving extremely fast. That’s the key problem. It’s just moving extremely fast.

If you think about the US elections, the coming US elections, so whoever wins the elections, over the next four years, some of the most important decisions they will have to make would be about AIs and regulating AIs and AI safety and so forth. And it’s not one of the main issues in the presidential debates. It’s not even clear what is the difference, if there is one, between Republicans and Democrats on AI.

So on specific issues, we start seeing differences when it comes to issues of freedom of speech and regulation and so forth. But about the broader question, it’s not clear at all. And again, the biggest danger of all is that we will just rush forward without thinking and without developing the mechanisms to slow down or to stop, if necessary.

If you think about it like a car, so when they taught me how to drive a car, the first thing I learned is how to use the brakes. That’s the first thing I think they teach most people. Only after you know how to use the brakes, they teach you how to use the fuel pedal, the accelerator.

And it’s the same when you learn how to ski. I never learned how to ski, but people who have told me the first thing they teach you is how to stop, how to fall. It’s a bad idea to first teach you how to kind of, okay, go faster, and then when you’re down the slope, they start shouting, okay, this is how you stop. And this is what we’re doing with AI. Like you have this chorus of people in places like Silicon Valley, let’s go as fast as we can. If there is a problem down the road, we’ll figure out how to stop. It’s very, very dangerous.

Yuval Noah Harari’s Relationship with Technology

ANDREW ROSS SORKIN: Yuval, before you go, let me ask you one final, final question, and it’s news we could all use. You are writing this whole book about AI and technology, and you do not carry a smartphone. Is this true?

YUVAL NOAH HARARI: I have a kind of emergency smartphone because of various services.

ANDREW ROSS SORKIN: How does your whole life work?

YUVAL NOAH HARARI: But I don’t carry it with me.

ANDREW ROSS SORKIN: I was told you do not carry a phone, you don’t have an email, the whole thing.

YUVAL NOAH HARARI: No, I have email, I’m sure.

ANDREW ROSS SORKIN: So you have email.

YUVAL NOAH HARARI: I don’t, and I try to use technology, but not to be used by it. And part of the answer is that I have a team who is carrying the smartphone and doing all this for me. So it’s not so fair to say that I don’t have it. But I think on a bigger issue, what we can say, it’s a bit like with food.

That 100 years ago, food was scarce. So people ate whatever they could, and if they found something full of sugar and fat, they ate as much of it as possible because it gave you a lot of energy. And now we are flooded by enormous amounts of food and junk food, which is artificially pumped full of fat and sugar, and it’s creating immense health problems. And most people have realized that more food is not always good for me, and that I need to have some kind of diet.

And it’s exactly the same with information. We need an information diet. But previously, information was scarce, so we consumed whatever we could find. Now we are flooded by enormous amounts of information, and much of it is junk information, which is artificially filled with hatred and greed and fear.

And we basically need to go on an information diet and consume less information and be far more mindful about what we put inside. That information is the food for the mind, and if you feed your mind with unhealthy information, you’ll have a sick mind. It’s as simple as that.

ANDREW ROSS SORKIN: Well, we want to thank you, Yuval. Add Nexus to your information diet because it’s an important document about our future and our world. I want to thank you for this fascinating conversation.

YUVAL NOAH HARARI: Thank you, and thank you for all your questions. Thank you.
