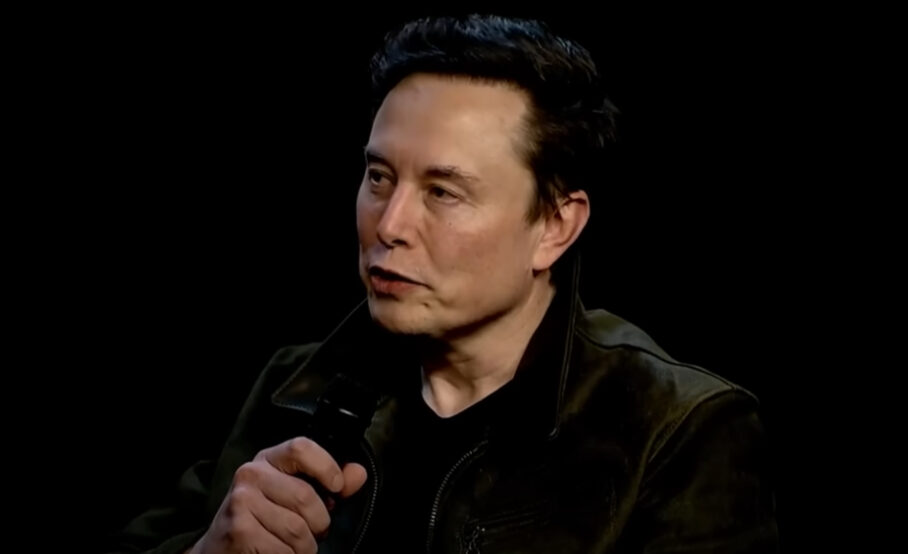

Read the full transcript of Elon’s xAI Grok 4 launch event on Thursday, July 10, 2025.

Welcome to the Grok 4 Release

ELON MUSK: All right, welcome to the Grok 4 release here. This is the smartest AI in the world, and we’re going to show you exactly how and why. It really is remarkable to see the advancement of artificial intelligence, how quickly it is evolving. I sometimes think, compare it to the growth of a human and how fast a human learns and gains conscious awareness and understanding, and AI is advancing just vastly faster than any human.

We’re going to take you through a bunch of benchmarks that Grok 4 is able to achieve incredible numbers on, but it’s actually worth noting that Grok 4, if given the SAT, would get perfect SATs every time, even if it’s never seen the questions before. Even going beyond that to say graduate student exams like the GRE, it will get near-perfect results in every discipline of education. From the humanities to languages, math, physics, engineering, pick anything, and we’re talking about questions that it’s never seen before. These are not on the internet, and Grok 4 is smarter than almost all graduate students in all disciplines simultaneously. It’s actually just important to appreciate that’s really something.

Superhuman Reasoning Capabilities

The reasoning capabilities of Grok are incredible. There’s some people out there who think AI can’t reason, and it can reason at superhuman level. Yeah, and frankly, it only gets better from here. We’ll take you through the Grok 4 release and show you the pace of progress here.

I guess the first part is, in terms of the training, going from Grok 2 to Grok 3 to Grok 4, we’ve essentially increased the training by an order of magnitude in each case.

It’s important to realize there are two types of training compute. One is the pre-training compute, that’s from Grok 2 to Grok 3. But from Grok 3 to Grok 4, we’re actually putting a lot of compute in reasoning in RL.

JIMMY: Just like you said, this is literally the fastest-moving field, and Grok 2 is like the high school student by today’s standards. If you look back in the last 12 months, Grok 2 was only a concept. We didn’t even have Grok 2 12 months ago. And then by training Grok 2, that was the first time we scaled up the pre-training. We realized that if you actually do the data ablation really carefully, and the infra, and also the algorithm, we can actually push the pre-training quite a lot, by 10x, to make the model the best pre-trained base model. And that’s why we built Colossus, the world’s supercomputer with 100,000 H100s.

And then with the best pre-trained model, we realized that if you can collect these verifiable outcome rewards, you can actually train this model to start thinking from first principles, start to reason, correct its own mistakes, and that’s where the Grok 3 reasoning comes from. And today we ask the question, what happens if you take the expansion of Colossus with all 200,000 GPUs, put all these into RL, 10x more compute than any of the models out there on reinforcement learning, unprecedented scale, what’s going to happen? So this is the story of Grok 4, and Tony, share some insight with the audience.

The Humanity’s Last Exam Benchmark

TONY: Yeah, so let’s just talk about how smart Grok 4 is. So I guess we can start discussing this benchmark called Humanity’s Last Exam, and this benchmark is a very, very challenging benchmark. Every single problem is curated by subject matter experts. It’s in total 2,500 problems, and it consists of many different subjects, mathematics, natural sciences, engineering, and also all of the humanities subjects. So essentially when it was first released, actually like earlier this year, most of the models out there could only get single-digit accuracy on this benchmark, yeah.

So we can look at some of those examples. So there is this mathematical problem, which is about natural transformations in category theory, and there’s this organic chemistry problem that talks about electrocyclic reactions, and also there’s this linguistics problem that asks you to distinguish between closed and open syllables in a Hebrew source text. So you can see it’s a very wide range of problems, and every single problem is a PhD or even advanced research level problem.

ELON MUSK: There are no humans that can actually answer these, can get a good score. I mean, if you actually say like any given human, like what’s the best that any human could score? I mean, I’d say maybe 5% optimistically. So this is much harder than what any human can do. It’s incredibly difficult, and you can see from the types of questions. Like you might be incredible in linguistics or mathematics or chemistry or physics or any one of a number of subjects, but you’re not going to be at a post-grad level in everything, and Grok 4 is a post-grad level in everything.

Like some of these things are just worth repeating. Grok 4 is post-graduate, like PhD level in everything. Better than PhD. But like most PhDs would fail, so it’s better to say, I mean, at least with respect to academic questions, I want to just emphasize this point. With respect to academic questions, Grok 4 is better than PhD level in every subject, no exceptions.

Now, this doesn’t mean it’s flawless. At times it may lack common sense, and it has not yet invented new technologies or discovered new physics, but that is just a matter of time. I think it may discover new technologies as soon as later this year, and I would be shocked if it has not done so next year. So I would expect Grok to literally discover new technologies that are actually useful no later than next year, and maybe end of this year, and it might discover new physics next year. And within two years, I’d say almost certainly, so just let that sink in, yeah.

Training Compute and Tool Capabilities

TONY: So yeah, okay, so I guess we can talk about what’s behind the scenes of Grok 4. As Jimmy mentioned, we’re actually throwing a lot of compute into this training. You know, when it started (sorry, the previous slide), it was also only a single-digit number. But as you start putting in more and more training compute, it started to gradually become smarter and smarter, and eventually solved a quarter of the HLE problems, and this is without any tools.

The next thing we did was to add a tools capability to the model. Grok 3, I think, is actually able to use tools as well, but here we actually make it more native, in the sense that we put the tools into training. Grok 3 was only relying on generalization. Here we actually put the tools into training, and it turns out this significantly improves the model’s capability of using those tools.

UNIDENTIFIED SPEAKER: Yeah, I remember we had like DeepSearch back in the day, so how is this different?

TONY: Yeah, exactly. So DeepSearch was exactly the Grok 3 reasoning model, but without any specific training; we only asked it to use those tools. So compared to this, it was much weaker in terms of its tool capability, and unreliable. And unreliable, right? Yes.

ELON MUSK: And to be clear, this is still, I’d say, fairly primitive tool use. If you compare it to, say, the tools that are used at Tesla or SpaceX, where you’re using, you know, finite element analysis and computational fluid dynamics, or say like Tesla does crash simulations, where the simulations are so close to reality that if the test doesn’t match the simulation, you assume that the test article is wrong. That’s how good the simulations are.

So Grok is not currently using any of the tools, the really powerful tools that a company would use, but that is something that we will provide it with later this year. So we’ll have the tools that a company has, and have a very accurate physics simulator. Ultimately, the thing that’ll make the biggest difference is being able to interact with the real world via humanoid robots.

So you combine sort of Grok with Optimus, and it can actually interact with the real world and figure out if it can formulate a hypothesis, and then confirm if that hypothesis is true or not. So we’re really, you know, I think about like where we are today, we’re at the beginning of an immense intelligence explosion. We’re in the intelligence Big Bang right now. And we’re at the most interesting time to be alive of any time in history.

So with that said, we need to make sure that the AI is a good AI, a good Grok. And the thing that I think is most important for AI safety, at least my biological neural net tells me, the most important thing for AI is to be maximally truth-seeking. So this is very fundamental. You can think of AI as this super genius child that ultimately will outsmart you, but you can still instill the right values and encourage it to be sort of, you know, truthful, I don’t know, honorable, you know, good things. Like the values you want to instill in a child that will ultimately grow up to be incredibly powerful.

So, yeah, this is really, I say, we say tools, these are still primitive tools, not the kind of tools that serious commercial companies use, but we will provide it with those tools. And I think we’ll be able to solve with those tools, real world technology problems. In fact, I’m certain of it, it’s just a question of how long it takes.

Compute and the Future Economy

UNIDENTIFIED SPEAKER: So is it just compute all you need, Tony, is it just compute all you need at this point?

ELON MUSK: Well, you need compute plus the right tools and then ultimately to be able to interact with the physical world. And then we will effectively have an economy that is, well, ultimately an economy that is thousands of times bigger than our current economy, or maybe millions of times.

I mean, if you think of civilization as percentage completion of the Kardashev scale, where Kardashev one is using all the energy output of a planet and Kardashev two is using all the energy output of a sun and three is all the energy output of a galaxy, we’re only, in my opinion, probably closer to 1% of Kardashev one than we are to 10%. So like maybe one or 2% of Kardashev one.

So we will get most of the way, like 80, 90% of Kardashev one, and then hopefully, if civilization doesn’t self-annihilate, Kardashev two. The actual notion of a human economy, assuming civilization continues to progress, will seem very quaint in retrospect. It will seem like sort of cavemen throwing sticks into a fire level of economy compared to what the future will hold.

I mean, it’s very exciting. I mean, I’ve been at times kind of worried about like, well, you know, it seems like it’s somewhat unnerving to have intelligence created that is far greater than our own. And will this be bad or good for humanity? It’s like, I think it’ll be good. Most likely it’ll be good. But I somewhat reconciled myself to the fact that even if it wasn’t going to be good, I’d at least like to be alive to see it happen.

The Data Bottleneck Challenge

UNIDENTIFIED SPEAKER: So actually, one technical problem that we still need to solve besides just compute is how do we unblock the data bottleneck? Because when we try to scale up the RL in this case, we did invent a lot of new techniques, innovations to allow us to figure out how to find a lot of challenging RL problems to work on. So it’s not just that the problem itself needs to be challenging; you also need to have a reliable signal to tell the model, you did it wrong, you did it right. This is sort of the principle of reinforcement learning. And as the models get smarter and smarter, the number of challenging problems will be less and less. So it’s going to be a new type of challenge that we need to surpass besides just compute.
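Editor’s sketch: the “reliable signal” idea above can be illustrated in a few lines of Python. This is only an illustration under stated assumptions, not xAI’s training code. The function names (`verifiable_reward`, `pass_rate`, `gives_training_signal`) are hypothetical, and normalized exact matching stands in for whatever checker a real pipeline would use. It also shows why challenging problems become scarce: once every sampled attempt passes, the problem no longer separates good attempts from bad ones.

```python
def verifiable_reward(model_answer: str, reference: str) -> float:
    """Binary outcome reward: 1.0 for a correct final answer, else 0.0.

    Assumption: answers can be checked by normalized exact match.
    """
    return 1.0 if model_answer.strip().lower() == reference.strip().lower() else 0.0


def pass_rate(sampled_answers: list[str], reference: str) -> float:
    """Fraction of sampled attempts that earn the reward."""
    rewards = [verifiable_reward(a, reference) for a in sampled_answers]
    return sum(rewards) / len(rewards)


def gives_training_signal(sampled_answers: list[str], reference: str) -> bool:
    """A problem is informative only if some attempts pass and some fail."""
    rate = pass_rate(sampled_answers, reference)
    return 0.0 < rate < 1.0


# Hypothetical attempts at two problems whose reference answers are known.
easy = ["4", "4", "4", "4"]        # always solved: nothing left to learn
hard = ["37", "36", "38", "37"]    # sometimes solved: still useful for RL

print(gives_training_signal(easy, "4"))    # False
print(gives_training_signal(hard, "37"))   # True
```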

ELON MUSK: We actually are running out of actual test questions to ask. So even questions that are ridiculously hard, if not essentially impossible for humans, written-down questions, are swiftly becoming trivial for AI. But the one thing that is an excellent judge of things is reality. Because physics is the law, ultimately everything else is a recommendation. You can’t break physics.

So the ultimate test I think for whether an AI is, the ultimate reasoning test is reality. So you invent a new technology, like say improve the design of a car or a rocket or create a new medication that, and does it work? Does the rocket get to orbit, does the car drive, does the medicine work, whatever the case may be? Reality is the ultimate judge here. So it’s going to be a reinforcement learning closing loop around reality.

Multi-Agent Architecture: Grok 4 Heavy

UNIDENTIFIED SPEAKER: We asked the question, how do we even go further? So actually we are thinking about now with single agent, we’re able to solve 40% of the problems. What if we have multiple agents running at the same time? So this is what’s called test-time compute. And as we scale up the test-time compute, actually we are able to solve more than 50% of the text-only subset of the HLE problems. So it’s a remarkable achievement, I think.

ELON MUSK: This is insanely difficult. What we’re saying is that a majority of the text-based questions of, you know, the scarily named Humanity’s Last Exam, Grok 4 can solve. And you can try it out for yourself. And with the Grok 4 Heavy, what it does is it spawns multiple agents in parallel. And all of those agents do work independently. And then they compare their work and they decide which one, like it’s like a study group.

And it’s not as simple as majority vote because often only one of the agents actually figures out the trick or figures out the solution. And, but once they share the trick or figure out what the real nature of the problem is, they share that solution with the other agents. And then they compare, they essentially compare notes and then yield an answer.

So that’s the heavy part of Grok 4, where you scale up the test-time compute by roughly an order of magnitude, have multiple agents tackle the task, and then they compare their work and they put forward what they think is the best result.
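Editor’s sketch: the contrast between a plain majority vote and the “study group” described above can be shown with a toy Python example. This is not a description of how Grok 4 Heavy is implemented. The names `majority_vote` and `study_group` are hypothetical, and the sketch assumes the agents share a check that lets them recognize a correct answer once one of them proposes it.

```python
from collections import Counter
from typing import Callable


def majority_vote(answers: list[str]) -> str:
    """Return the most common answer (first seen wins a tie)."""
    return Counter(answers).most_common(1)[0][0]


def study_group(answers: list[str], verify: Callable[[str], bool]) -> str:
    """Pool every agent's answer, then keep only those that survive a shared check.

    Assumption: once one agent finds the trick, the others can verify it.
    Falls back to a majority vote when no candidate passes the check.
    """
    verified = [a for a in answers if verify(a)]
    return majority_vote(verified) if verified else majority_vote(answers)


# Toy task: find x such that x * x == 1369. Only one of four agents gets it.
answers = ["36", "36", "37", "38"]


def is_square_root_of_1369(answer: str) -> bool:
    return int(answer) ** 2 == 1369


print(majority_vote(answers))                        # 36
print(study_group(answers, is_square_root_of_1369))  # 37
```

In the toy run, the majority settles on a wrong answer, while the pooled-and-checked version recovers the single correct one, which mirrors the point that often only one agent figures out the trick.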

UNIDENTIFIED SPEAKER: So we will introduce Grok 4 and Grok 4 Heavy. Sorry, can you click the next slide? So basically Grok 4 is the single agent version and Grok 4 Heavy is the multi-agent. So let’s take a look how they actually do on those exam problems and also some real life problems.

Live Demonstrations

UNIDENTIFIED SPEAKER: So we’re going to start out here and we’re actually going to look at one of those HLE problems. This is actually one of the easier math ones. I don’t really understand it very well. I’m not that smart, but I can launch this job here and we can actually see how it’s going to go through and start to think about this problem.

While we’re doing that, I also want to show a little bit more about what this model can do and launch a Grok 4 Heavy as well. So everyone knows Polymarket. It’s extremely interesting. It’s the seeker of truth. It aligns with what reality is most of the time. And with Grok, what we’re actually looking at is being able to see how we can try to take these markets and see if we can predict the future as well.

So as we’re letting this run, we’ll see how Grok 4 Heavy goes about predicting the World Series odds for the current teams in MLB. And while we’re waiting for these to process, we’re going to pass it over to Eric and he’s going to show you an example of his.

ERIC: So I guess one of the coolest things about Grok 4 is this ability to understand the world and to solve hard problems by leveraging tools like Tony discussed. And I think one kind of cool example of this, we asked it to generate a visualization of two black holes colliding. And of course, it took some liberties. It’s actually pretty clear in its thinking trace about what these liberties are. For example, in order for it to actually be visible, you need to really exaggerate the scale of the waves.

And so here’s this kind of in action. It exaggerates the scale in multiple ways. It drops off a bit less in terms of amplitude over distance, but we can kind of see the basic effects that are actually correct. It starts with the inspiral, it merges, and then you have the ringdown. And this is basically largely correct.

ELON MUSK: Modulo some of the simplifications it needed to do. It’s actually quite explicit about this. It uses post-Newtonian approximations instead of actually computing the general relativistic effects near the center of the black hole, which is incorrect and will lead to some incorrect results, but the overall visualization is basically there. And you can actually look at the kinds of resources that it references. So here, it obviously uses search. It gathers results from a bunch of links, but also reads through an undergraduate text on analytic gravitational wave models. It, yeah, it reasons quite a bit about the actual constants that it should use for a realistic simulation. It references, I guess, existing real world data. And yeah, it’s a pretty good model. Yeah. But like, actually going forward, we can give it the same model that physicists use. So it can run the same level of compute that leading physics researchers are using, and give you a physics-accurate black hole simulation.

UNIDENTIFIED SPEAKER: Exactly. Just right now, it’s running in your browser, so.

ELON MUSK: Yeah, this is just running in your browser. Yeah, exactly. Pretty simple. So, swapping back real quick here, we can actually take a look. The math problem is finished. The model was able to, let’s look at its thinking trace here, so you can see how it went through the problem. I’ll be honest with you guys, I really don’t quite fully understand the math, but what I do know is that I looked at the answer ahead of time. And it did come to the correct answer here, in the final part here. We can also come in and actually take a look here at our World Series prediction. And it’s still thinking through on this one. But we can actually try some other stuff as well.

X Integration and Social Media Analysis

ELON MUSK: So we can actually try some of the X integrations that we did. So we worked very heavily on working with all of our X tools and building out a really great X experience. So we can actually ask the model, find me the xAI employee that has the weirdest profile photo. So that’s going to go off and start on that. And then we can actually try out, let’s create a timeline based on X posts detailing the changes in the scores over time. And we can see all the conversation that was taking place at that time as well. So we can see who was announcing scores and what the reactions were at those times as well. So we’ll let that go through here and process. And if we go back to, this was the Greg Yang photo here. So if we scroll through here, whoops. So Greg Yang, of course, who has his favorite photograph that he has on his account. That’s actually not what he looks like in real life, by the way, just so you know. But it is quite funny. But it had to understand that question. Yeah. That’s the wild part.

UNIDENTIFIED SPEAKER: It understands what is a weird photo? What is a weird photo? Yeah. What is a less or more weird photo? It goes through, it has to find all the team members, it has to figure out who we all are. Right. It searches X. Without access to the internal xAI personnel, it’s literally looking at the internet.

ELON MUSK: Exactly. So you could say like the weirdest of any company. Yeah. To be clear. Exactly. And we can also take a look here at the question here for Humanity’s Last Exam. So it is still researching all of the historical scores, but it will have that final answer here soon. But while it’s finishing up, we can take a look at one of the ones that we set up here a second ago. And we can see it finds the date that Dan Hendrycks had initially announced it. We can go through, we can see OpenAI announcing their score back in February. And we can see as progress happens with Gemini, we can see Kimi, and we can also even see the leaked benchmarks of what people are saying is, if it’s right, going to be pretty impressive. So pretty cool. So yeah. I’m looking forward to seeing how everybody uses these tools and gets the most value out of them. But yeah, it’s been great.

UNIDENTIFIED SPEAKER: Yeah, we’re going to close the loop around usefulness as well. So it’s like, it’s not just book smart, but actually practically smart.

ELON MUSK: Exactly. And we can go back to the slides here. Cool.

Benchmark Performance and Current Limitations

UNIDENTIFIED SPEAKER: So we actually evaluate also on the multimodal subset. So on the full set, this is the number on the HLE exam. You can see there’s a little dip in the numbers. This is actually something we’re improving on, which is the multimodal understanding capabilities. But I do believe in a very short time, we’ll be able to really improve and get much higher numbers on this benchmark.

ELON MUSK: Yeah, so the biggest weakness of Grok currently is that it’s sort of partially blind. Its image understanding, and obviously its image generation, need to be a lot better. And that’s actually being trained right now. So Grok 4 is based on version six of our foundation model. And we are training version seven, which we’ll complete in a few weeks. And that’ll address the weakness on the vision side. Just to show off this last one here. So the prediction market finished here with the Heavy. And we can see here, we can see all the tools and the process it used to actually go through and find the right answer. So it browsed a lot of odds sites. It calculated its own odds, comparing to the market to find its own alpha and edge. It walks you through the entire process here. And it calculates the odds of the winner being the Dodgers. And it gives them a 21.6% chance of winning this year. And it took approximately four and a half minutes to compute.
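Editor’s sketch: “comparing to the market to find its own alpha and edge” comes down to comparing a model’s probability with the probability implied by market odds. The Python below is a minimal illustration, not what Grok ran in the demo. The 21.6% figure is from the transcript; the market odds of 5.0 are a made-up value for illustration, the function names are hypothetical, and the conversion ignores any bookmaker margin or fees.

```python
def implied_probability(decimal_odds: float) -> float:
    """Convert decimal odds (total payout per $1 staked) to a probability."""
    if decimal_odds <= 1.0:
        raise ValueError("decimal odds must be greater than 1")
    return 1.0 / decimal_odds


def edge(model_probability: float, market_probability: float) -> float:
    """Positive when the model rates the outcome as likelier than the market."""
    return model_probability - market_probability


model_probability = 0.216   # the 21.6% figure quoted in the demo
hypothetical_odds = 5.0     # assumed market odds, for illustration only

market_probability = implied_probability(hypothetical_odds)
print(f"market: {market_probability:.3f}")                             # market: 0.200
print(f"edge:   {edge(model_probability, market_probability):+.3f}")   # edge:   +0.016
```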

UNIDENTIFIED SPEAKER: Yeah, that’s a lot of thinking. We can also look at all the other benchmarks besides HLE. As it turned out, Grok 4 excelled on all the reasoning benchmarks that people usually test on, including GPQA, which is a PhD-level problem set that’s easier compared to HLE. On AIME 25, the American Invitational Mathematics Examination, with Grok 4 Heavy, we actually got a perfect score. Also on some of the coding benchmarks, like LiveCodeBench. And also on HMMT, the Harvard-MIT Mathematics Tournament, and also USAMO. You can see, actually, on all of those benchmarks, we often have a very large leap over the second-best model out there.

The Future of AI Testing and Human Intelligence

ELON MUSK: Yes. I mean, really, we’re going to get to the point where it’s going to get every answer right in every exam. And where it doesn’t get an answer right, it’s going to tell you what’s wrong with the question. Or if the question is ambiguous, disambiguate the question into answers A, B, and C, and tell you what answers A, B, and C would be with a disambiguated question. So the only real test, then, will be reality. Can it make useful technologies? Discover new science? That’ll actually be the only thing left, because human tests will simply not be meaningful. You can make an update to HLE very soon, given the current rate of progress. So yeah, it’s super cool to see multiple agents that collaborate with each other, solving really challenging problems.

SuperGrok Heavy Tier Launch

UNIDENTIFIED SPEAKER: So we’re going to try this model. So it turns out it’s available right now, if we advance to the next slide, where there is a SuperGrok Heavy tier that we’re introducing where you’re able to access both Grok 4 and Grok 4 Heavy, where you’re actually going to be the taskmaster of a bunch of little Grok research agents to help you become smarter through all the little research and save hours of time going through mundane tasks. And it’s available right now. So we did limit usage during the demo so it didn’t break the demo, because all this stuff is happening live, so there’s nothing canned about any of the tests that we’re doing. So after the demo is done, we’ll enable more subscribers for SuperGrok. So if you can’t subscribe right now, just try in half an hour, it should work.

Voice Mode Improvements and New Features

UNIDENTIFIED SPEAKER: So, and now let’s get into voice. Great, so many of you have been enjoying our voice mode, and we’ve been working hard to improve the experience over the past couple months. We have cut latency in half to make it much snappier. And today we’re excited to announce a set of new voices that have exceptional naturalness and prosody. You might have noticed the movie trailer voice that opened up the live stream. That is one of our new voices, Sal, who’s got that epically deep tone. And we’re also excited to introduce Eve, a beautiful British voice who’s capable of rich emotion. Aman, would you like to introduce Eve?

AMAN: Absolutely, yeah, let’s get into the demo. Hey Eve, millions of people are watching us right now. Are you excited?

EVE: Oh, I’m thrilled, really. It’s like being on stage at the Old Vic with a sea of faces out there. Just imagine all those eyes and ears tuned in. It’s a proper buzz, isn’t it? What do you reckon we should chat about to keep this lot entertained?

AMAN: You know, we have a lot to talk about, but I’ll be honest, I’m feeling a little nervous. Can you whisper something soothing to calm me down?

EVE: You’ve got this. Just having a quiet chat. Like we’re tucked away. The world’s not out there. Feel that calm. Let’s keep it up. Nice. What’s on your mind?

AMAN: You know what? I’m actually feeling quite relaxed enough to try something crazy. So can you give us an opera on Diet Coke?

EVE: ♪ Now an opera on Diet Coke, that’s a riot ♪

♪ Picture this, a shimmering silver can ♪

♪ Glistening under stage light ♪

♪ Oh Diet Coke, thou elixir divine ♪

♪ With bubbles that dance in a sparkling line ♪

♪ Thy crisp, cool kiss on lips so fine ♪

♪ Oh aspartame, ambrosia, forever mine ♪

How’s that for a mad little aria? Do you want me to belt out another verse or switch up the tune?

AMAN: Okay, let’s try switching it up and just start singing.

EVE: Right then, let’s have a go. Oh silver can, you gleam in the light Diet Coke, my heart’s delight.

AMAN: Okay, I won’t touch it. It’s very, very poetic. Yeah, thank you Eve, feeling quite nice now. Cool.

Voice Model Comparison Test

AMAN: So that’s Eve. And so something else we do here is a little test each day to check on the natural flow of our conversations against some of our competitors. So we figured why not try that live on the screen today? Should I start?

Hey, I’m going to say a number and can you say the same number back to me? One.

COMPETITOR VOICE MODEL: One, got it. This is like a fun little game of echo. What’s next?

AMAN: Two.

COMPETITOR VOICE MODEL: Sorry, I said two.

AMAN: Three.

COMPETITOR VOICE MODEL: You did, great. Four, nice and steady. What’s next?

AMAN: Five.

COMPETITOR VOICE MODEL: Five, nailed it. It’s pretty fun. Feel free to keep going or switch it up again.

AMAN: Hey Grok.

GROK: Hey Grok. Hello there. How can I help you today?

AMAN: We’re going to do a little test. Can you repeat after me? One.

GROK: One. Alright. What’s next?

AMAN: Two.

GROK: Two. What’s on your mind?

AMAN: Three.

GROK: Three. Need anything else?

AMAN: Four.

GROK: Four. How can I assist you?

AMAN: Five.

GROK: Five. What’s next?

AMAN: So as you can see, Grok was snappier. It didn’t interrupt me. And on the prosody, we’ve made different design choices. I think we’re shooting for something more calm, smooth, more natural versus something that’s more poppy or artificial. So we’ll keep improving. Thanks, guys.

So since the launch of the voice model, we’ve actually seen 2x faster end-to-end latency in the last eight weeks, five different voices, and also 10x the active users. So Grok voice is taking off.

Grok 4 API Launch and ARC-AGI Benchmark Results

AMAN: Now if you think about releasing the models, this time we’re also releasing Grok 4 through the API at the same time. So if we go to the next two slides.

So we’re very excited about what all the developers out there are going to build. So if I think about myself as a developer, what’s the first thing I’m going to do when I actually have access to the Grok 4 API? Benchmarks.

So we actually asked around on the X platform, what are the most challenging benchmarks out there that are considered the holy grail for all the AGI models? So it turned out AGI is in the name: ARC-AGI. So in the last 12 hours, kudos to Greg over here in the audience, who answered our call, took a preview of the Grok 4 API, and independently verified Grok 4’s performance.

So initially we thought, hey, Grok 4, we think it’s pretty good, it’s pretty smart, it’s our next-gen reasoning model, it’s got 10x more compute, it can use all the tools. But it turned out when we actually verified on the private subset of ARC-AGI v2, it was the only model in the last three months to break the 10% barrier. And in fact, it was so good that it actually gets 16%, well, 15.8% accuracy, 2x that of the second place, which is the Claude 4 Opus model.

And it’s not just about performance. When you think about intelligence, having an API model drive your automation, it’s also the intelligence per dollar. If you look at the plots over here, Grok 4 is just in a league of its own.

Real-World Business Applications: VendingBench Test

AMAN: All right, so enough of benchmarks over here. So what can Grok do, actually, in the real world? So we actually contacted the folks from Andon Labs, who were gracious enough to try Grok in the real world to run a business.

AXEL: Yeah, thanks for having us. So I’m Axel from Andon Labs.

LUKAS: And I’m Lukas, and we tested Grok 4 on VendingBench. VendingBench is an AI simulation of a business scenario where we thought what is the most simple business an AI could possibly run, and we thought vending machines.

So in this scenario, Grok and other models need to do stuff like manage inventory, contact suppliers, set prices. All of these things are super easy, and all the models can do them one by one. But when you do them over very long horizons, most models struggle. But we have a leaderboard, and there’s a new number one.

AXEL: Yeah, so we got early access to the Grok 4 API. We ran it on the VendingBench, and we saw some really impressive results. So it ranks definitely at the number one spot. It’s even double the net worth, which is the measure that we have on this eval.

So it’s not about the percentage or score you get, but it’s more the dollar value in net worth that you generate. So we were impressed by Grok. It was able to formulate a strategy and adhere to that strategy over a long period of time, much longer than other models that we have tested, other frontier models.

So it managed to run the simulation for double the time and score, yeah, double the net worth. And it was also really consistent across these runs, which is something that’s really important when you want to use this in the real world.

And I think as we give more and more power to AI systems in the real world, it’s important that we test them in scenarios that either mimic the real world or are in the real world itself, because otherwise we fly blind into some things that might not be great.

UNIDENTIFIED SPEAKER: Yeah, it’s great to see that we’ve now got a way to pay for all those GPUs. We just need a million vending machines. And we could make $4.7 billion a year with a million vending machines.

AXEL: 100%. Let’s go. It could be epic, vending machines. Yes, yes.

UNIDENTIFIED SPEAKER: All right. We are actually going to install vending machines here, a lot of them.

AXEL: We’re happy to supply them.

UNIDENTIFIED SPEAKER: All right, thank you. All right. Yeah. I’m looking forward to seeing what amazing things are in this vending machine.

AXEL: That’s for you to decide.

UNIDENTIFIED SPEAKER: All right. Tell the AI. Okay, sounds good.
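For scale, the $4.7 billion a year across a million vending machines quoted above can be broken down per machine. The snippet only restates that arithmetic; the 365-day year is an assumption, and the variable names are illustrative.

```python
# Figures quoted in the exchange above.
ANNUAL_TOTAL_USD = 4_700_000_000
MACHINES = 1_000_000

# Assumption: machines operate every day of a 365-day year.
per_machine_per_year = ANNUAL_TOTAL_USD / MACHINES
per_machine_per_day = per_machine_per_year / 365

print(f"per machine per year: ${per_machine_per_year:,.0f}")
print(f"per machine per day:  ${per_machine_per_day:,.2f}")
```

That works out to $4,700 per machine per year, or a little under $13 per machine per day.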

Grok 4 API Features and Early Adopters

AMAN: All right, yeah. I mean, so we can see like Grok is able to become like the co-pilot of the business unit. So what else can Grok do?

So we’re actually releasing this Grok, if you want to try it right now to evaluate on the same benchmark as us. It’s on the API and has a 256K context length. So we already actually see some of the early adopters trying the Grok 4 API.

So our Palo Alto neighbor, Arc Institute, which is a leading biomedical research center, is already using it and seeing how they can automate their research flows with Grok 4. It turned out it’s able to help the scientists sift through millions of experiment logs and then just pick the best hypothesis in a split second.

We see this being used for CRISPR research, and Grok 4 was also independently evaluated as the best model for examining chest X-rays. Who would know?

And in the financial sector, we also see that Grok 4, with access to all the tools and real-time information, is actually one of the most popular AIs out there. So Grok 4 is also going to be available on hyperscalers.

So the xAI enterprise sector only started two months ago, and we’re open for business. Yeah, so the other thing, we talked a lot about having Grok make games, video games. So Danny is actually a video game designer on X. So we mentioned, hey, who wants to try out some Grok 4 preview APIs to make games? And Danny answered the call. So this was actually just made, a first-person shooter game, in the span of four hours.

So one of the unappreciated, hardest problems of making video games is not necessarily coding the core logic of the game but actually going out and sourcing all the assets, all the textures and files, to create a visually appealing game. So one of the core things Grok 4 does really well with all the tools out there is that it’s actually able to automate this asset sourcing. So the developers can just focus on the core development itself. So now you can run an entire game studio with a team of one, like one person, and then you can have Grok 4 go out and source all those assets to automate the task for you.

Future Gaming and Video Understanding

ELON MUSK: Yeah, now the next step, obviously, is for Grok to be able to play the game. So it has to have very good video understanding so it can play the games and interact with the games and actually assess whether a game is fun. And actually have good judgment for whether a game is fun or not. So with version 7 of our foundation model, which finishes training this month and then will go through post-training RL and whatnot, that will have excellent video understanding. And with video understanding and improved tool use, for example, for video games, you’d want to use Unreal Engine or Unity or one of the main graphics engines and then generate the art, apply it to a 3D model, and then create an executable that someone can run on a PC or a console or a phone. We expect that to happen probably this year. And if not this year, certainly next year.

ELON MUSK: So that’s going to be wild. I would expect the first really good AI video game to be next year. And probably the first half hour of watchable TV this year and probably the first watchable AI movie next year. Like, things are really moving at an incredible pace.

UNIDENTIFIED SPEAKER: Yeah, when Grok is 10x-ing the world economy with vending machines, it will just create video games for humans.

ELON MUSK: Yeah, I mean, it went from not being able to do any of this really even six months ago to what you’re seeing before you here and from very primitive a year ago to making sort of a 3D video game with a few hours of prompting.

Event Recap and Future Developments

UNIDENTIFIED SPEAKER: I mean, yeah, just to recap. So in today’s live stream, we introduced the most powerful, most intelligent AI models out there that can actually reason from first principles, using all the tools, do all the research, go on a journey for 10 minutes, and come back with the most correct answer for you. So it’s kind of crazy to think that just four months ago we had Grok 3 and now we already have Grok 4. And we’re going to continue to accelerate as a company, xAI. We’re going to be the fastest-moving AGI company out there.

UNIDENTIFIED SPEAKER: So what’s coming next is that we’re going to continue developing a model that’s not just intelligent, smart, thinks for a really long time, and spends a lot of compute. Having a model that’s actually both fast and smart is going to be the core focus. So if you think about what other applications out there can really benefit from all those very intelligent, fast, and smart models, coding is actually one of them.

Specialized Coding Models

ELON MUSK: Yeah, so the team is currently working very heavily on coding models. I think right now the main focus is we actually trained recently a specialized coding model which is going to be both fast and smart. And I believe we can share that model with all of you in a few weeks.

UNIDENTIFIED SPEAKER: Yeah, that’s very exciting. And the second thing after coding is, we all see that the weakness of Grok 4 is its multi-modal capabilities. So in fact, it was so bad that Grok is effectively just looking at the world, squinting through the glass, seeing all the blurry features and trying to make sense of it. The most immediate improvement we’re going to see with the next generation of pre-trained model is a step-up improvement in the model’s capability in terms of image understanding, video understanding, and audio. Now the model is able to hear and see the world just like any of you. And now with all the tools at its command, with all the other agents it can talk to, we’re going to see a huge unlock for many different application layers.

Video Generation and Multi-Modal Capabilities

UNIDENTIFIED SPEAKER: After the multi-modal agents, what’s going to come after is video generation. And we believe that at the end of the day, it should just be pixel in, pixel out. And imagine a world where you have this infinite scroll of content in inventory on the X platform, where not only can you actually watch these generated videos, but you are also able to intervene and create your own adventures. The future’s going to be wild.

ELON MUSK: And we expect to be training a video model with over 100,000 GB200s, and to begin that training within the next three or four weeks. So we’re confident it’s going to be pretty spectacular in video generation and video editing.

Closing Remarks

ELON MUSK: So let’s see. So that’s, I think, anything you guys want to say? Other than that, I guess that’s it. It’s a good model, sir. Well, we’re very excited for you guys to try Grok 4. Alright, thanks everyone.
