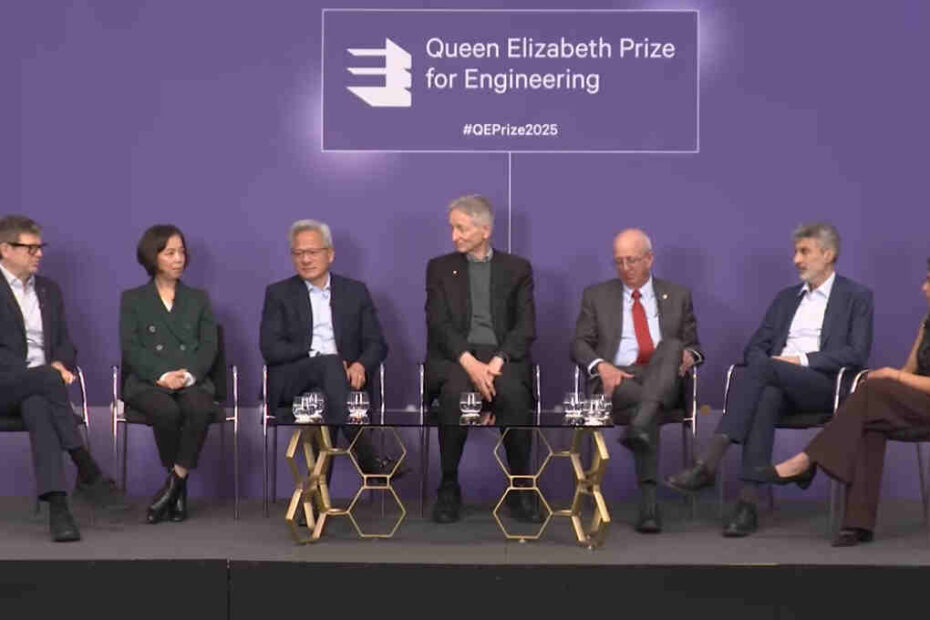

On November 6, 2025, Jensen Huang, Yoshua Bengio, Geoffrey Hinton, Fei-Fei Li, Yann LeCun, and Bill Dally spoke with the FT’s AI editor, Madhumita Murgia, at the FT Future of AI Summit in London. The following is the full transcript of the conversation.

Introduction

Madhumita Murgia: Hello, everybody. Good afternoon, good morning. And I am delighted to be the one chosen to introduce to you this really distinguished group of people that we’ve got here sitting around the table. Six, I think, of the most brilliant, most consequential people on the planet today. And I don’t think that’s an overstatement.

So these are the winners of the 2025 Queen Elizabeth Prize for Engineering. And it honors the laureates here that we see today for their singular impact on today’s artificial intelligence technology. Given your pioneering achievements in advanced machine learning and AI, and how the innovations that you’ve helped build are shaping our lives today, I think it’s clear to everyone why this is a really rare and exciting opportunity to have you together around the table.

For me personally, I’m really excited to hear you reflect on this present moment that we’re in, the one that everybody’s trying to get ahead of and understand. And your journey, the journey that brought you here today.

But also to understand how your work and you as individuals have influenced and impacted one another, and the companies and the technologies that you’ve built. And finally, I’d love to hear from you to look ahead and to help us all see a bit more clearly what is to come, which you are in the best position to do.

So I’m so pleased to have you all with us today and looking forward to the discussion.

Personal Moments of Awakening

Madhumita Murgia: So I’m going to start by going from zooming out to the very personal. I want to hear from each of you a personal moment in your career that you think has impacted the work that you’ve done, or was a turning point that brought you on this path to why you’re sitting here today. Whether it was early in your career and your research or much more recently, what was your personal moment of awakening that has impacted the technology? Should we start here with you, Yoshua?

# Yoshua Bengio’s Two Defining Moments

Yoshua Bengio: Thank you. Yes, with pleasure. I would go to two moments.

One, when I was a grad student and I was looking for something interesting to research, and I read some of Geoff Hinton’s early papers, and I thought, “Wow, this is so exciting. Maybe there are a few simple principles like the laws of physics that could help us understand human intelligence and help us build intelligent machines.”

And then the second moment I want to talk about is two and a half years ago after ChatGPT came out, and I realized, “Uh-oh, what are we doing? What will happen if we build machines that understand language, have goals, and we don’t control those goals? What happens if they are smarter than us? What happens if people abuse that power?”

So that’s why I decided to completely shift my research agenda and my career to try to do whatever I could about it.

Madhumita Murgia: Those are two very divergent things, very interesting.

# Bill Dally on Building AI Infrastructure

Madhumita Murgia: Tell us about your moment of building the infrastructure that’s fueling what we have.

Bill Dally: I’ll give two moments as well. So the first was, in the late ’90s I was at Stanford trying to figure out how to overcome what was at the time called the memory wall. The fact that accessing data from memory is far more costly in energy and time than doing arithmetic on it.

And it sort of struck me to organize computations into these kernels connected by streams so you could do a lot of arithmetic without having to do very much memory access. And that basically led the way to what became called stream processing, ultimately GPU computing. And we originally built that thinking we could apply GPUs not just for graphics, but to general scientific computations.

So the second moment was I was having breakfast with my colleague Andrew Ng at Stanford. And at the time he was working at Google finding cats on the internet using sixteen thousand CPUs in this technology called neural networks, which they say had something to do with those. And he basically convinced me this is a great technology.

So I, with Brian Catanzaro, repeated the experiment on forty-eight GPUs at NVIDIA. And when I saw the results of that, I was absolutely convinced that this is what NVIDIA should be doing. We should be building our GPUs to do deep learning because this has huge applications in all sorts of fields beyond finding cats. And that was kind of a moment to really start working very hard on specializing the GPUs for deep learning and to make them more effective.

Madhumita Murgia: And when was that, what year?

Bill Dally: The breakfast was in 2010, and I think we repeated the experiment in 2011.

Madhumita Murgia: Okay.

# Geoffrey Hinton’s Early Language Model

Madhumita Murgia: Yeah. Geoff, tell us about your work.

Geoffrey Hinton: A very important moment was when, in about 1984, I tried using back propagation to learn the next word in a sequence of words. So it was a tiny language model, and I discovered it would learn interesting features for the meaning of the words.

So, just by giving it a string of symbols and having it try to predict the next word, it could learn how to convert words into sets of features that capture the meaning of the word, and have interactions between those features predict the features of the next word.

So that was actually a tiny language model from late 1984 that I think of as a precursor for these big language models. The basic principles were the same. It was just tiny.

But it took forty training examples.

Madhumita Murgia: It took forty years to get us here, though.

Geoffrey Hinton: And it took forty years to get here. And the reason it took forty years was we didn’t have the compute and we didn’t have the data. And we didn’t know that at the time. We couldn’t understand why we weren’t just solving everything with back propagation.

# Jensen Huang on Scaling AI

Madhumita Murgia: Which takes us cleanly to Jensen. We didn’t have the compute for forty years and here now you are building it. Tell us about your moments that are of real kind of clarity.

Jensen Huang: Well, for my career, I was the first generation of chip designers that was able to use higher level representations and design tools to design chips. And that discovery was helpful when I learned about a new way of developing software around the 2010 timeframe simultaneously from three different labs.

Researchers at the University of Toronto reached out to us at the same time as researchers at NYU and at Stanford. And I saw the early indications of what turned out to be deep learning around the same time, using a framework and a structured design to create software. And that software turned out to be incredibly effective.

And that second observation, seeing again that using frameworks, higher level representations, and structured designs like deep learning networks to develop software was very similar to designing chips, struck me. The patterns were very similar.

And I realized at that time, maybe we could develop software and capabilities that scale very nicely as we’ve scaled chip design over the years. And so that was quite a moment for me.

Madhumita Murgia: And when do you think was the moment when the chips really started to help scale up today’s sort of the LLMs that we have today? Because you said 2010, that’s still a fifteen year…

Jensen Huang: Yeah. The thing about NVIDIA’s architecture is once you’re able to get something to run well on a GPU because it became parallel, you could get it to run well on multiple GPUs. That same sensibility of scaling the algorithm to run on many processors on one GPU is the same logic and the same reasoning that you could do it on multiple GPUs and then now multiple systems and in fact, multiple data centers.

And so that once we realized we could do that effectively, then the rest of it is about imagining how far you could extrapolate this capability. How much data do we have? How large can the networks be? How much dimensionality can it capture? What kind of problems can it solve?

All of that is really engineering at that point. The observation that the deep learning models are so effective is really quite the spark. The rest of it is really engineering extrapolation.

# Fei-Fei Li on Data and Human-Centered AI

Madhumita Murgia: Fei-Fei, tell us about your moment.

Fei-Fei Li: Yes. I also have two moments to share. So around 2006 and 2007, I was transitioning from a graduate student to a young assistant professor. And I was among the first generation of machine learning graduate students, reading papers from Yann, Yoshua, Geoff.

And I was really obsessed with trying to solve the problem of visual recognition, which is the ability for machines to see meaning in objects in everyday pictures. And we were struggling with this problem in machine learning called generalizability, which is: after learning from a certain number of examples, can we recognize a new example, a new sample?

And I’ve tried every single algorithm under the sun from Bayes net to support vector machines to neural network. And the missing piece that my student and I realized is that data is missing. If you look at the evolution or development of intelligent animals like humans, we were inundated with data in the early years of development, but our machines were starved with data.

So we decided to do something crazy at that time to create an internet scale data set over the course of three years called ImageNet that included fifteen million images hand curated by people around the world across twenty-two thousand categories. So for me, the moment at that point is big data drives machine learning. And it’s now the limiting factor, the building block of all of the algorithms that we’re seeing.

Madhumita Murgia: Yeah, it’s part of the scaling law of today’s AI.

Fei-Fei Li: And the second moment is 2018, I was the first chief scientist of AI at Google Cloud. Part of the work we do is serving all vertical industries under the sun, right? From healthcare to financial services, from entertainment to manufacturing, from agriculture to energy.

And that was a few years after the, what we call the ImageNet moment, a couple of years after AlphaGo.

Fei-Fei Li: And I realized…

Madhumita Murgia: AlphaGo being the algorithm that was able to beat humans at playing the Chinese board game.

Fei-Fei Li: Yes.

Fei-Fei Li: And as the chief scientist at Google, I realized this is a civilizational technology that’s going to impact every single human individual as well as sector of business. And if humanity is going to enter an AI era, what is the guiding framework so that we not only innovate, but we also bring benevolence through this powerful technology to everybody.

And that’s when I returned to Stanford as a professor to co-found the Human-Centered AI Institute and propose the Human-Centered AI framework so that we can keep humanity and human values in the center of this technology.

Madhumita Murgia: So developing but also looking at the impact and what’s next, which is where the rest of us come in.

# Yann LeCun on Self-Organizing Intelligence

Madhumita Murgia: Yann, do you want to round us out here? What have been your highlights?

Yann LeCun: Yeah. I’ll probably go back a long time. When I was an undergrad, I was fascinated by the question of AI and intelligence more generally, and discovered that people in the ’50s and ’60s had worked on training machines instead of programming them. I was really fascinated by this idea, probably because I thought I was either too stupid or too lazy to actually build an intelligent machine from scratch, right?

So it’s better to let it train itself or self-organize, and that’s the way intelligence in life builds itself. It’s self-organized. So I thought this concept was really fascinating, and I couldn’t find anybody when I graduated from engineering. I was doing chip design, by the way. I wanted to go to grad school. I couldn’t find anybody who was working on this, but I connected with some people who were interested in this and discovered Geoff’s papers, for example.

And he was the person in the world I wanted to meet most in 1983, when I started grad school, and we eventually met two years later.

Madhumita Murgia: And today, you’re friends, would you say?

Yann LeCun: Yes. We had lunch together in 1985, and we could finish each other’s sentences, basically. I had a paper written in French at a conference where he was a keynote speaker and managed to actually kind of decipher the math. It was kind of like back propagation a little bit to train multilayer nets.

It was known from the ’60s that the limitation of machine learning was due to the fact that we could not train machines with multiple layers. So that was really my obsession, and it was his obsession, too. So I had a paper that kind of proposed some way of doing it, and he kind of managed to read the math. So that’s how we hooked up.

Madhumita Murgia: And that’s what has set you on this path.

The Evolution of Learning Paradigms

Yann LeCun: So and then after that, you know, once you can train complex systems like this, you ask these other questions. So how do I build them? So they do something useful like recognizing images or things of that type.

And at the time, Geoff and I had this debate when I was a postdoc with him in the late ’80s. I thought the only machine learning paradigm that was well formulated was supervised learning. You show an image to the machine, and you tell it what the answer is.

And he said, “No, no, no. The only way we’re going to make progress is unsupervised learning,” and I was kind of dismissing this at the time. And what happened in the mid-2000s, when Yoshua and I started getting together and restarted the interest of the community in deep learning, is that we actually made our bet on unsupervised learning or self-supervised learning.

Madhumita Murgia: This is just a reinforcement loop, right?

Yann LeCun: This is not reinforcement. No, this is basically discovering the structure in data without training the machine to do any particular task, which is, by the way, the way LLMs are trained. So an LLM is trained to predict the next word, but it’s not really a task. It’s just a way for the system to learn a good kind of representation or capture the structure.

# The Future of AI: Beyond LLMs and Current Paradigms

Madhumita Murgia: Is there no reward system there? Sorry to get geeky, is there nothing to say this is correct and therefore keep doing it?

Geoffrey Hinton: Well, this is correct if you predict the next word correctly. And so that’s different from the rewards in reinforcement learning where you say that’s good.

Madhumita Murgia: Okay.

Yann LeCun: So in fact, I’m going to blame it on you. It was that Fei-Fei produced this big dataset called ImageNet, which was labeled, and so we could use supervised learning to train the systems on it. And that actually worked much better than we expected. And so we temporarily abandoned the whole program of working on self-supervised and unsupervised learning because supervised learning was working so well.

Geoffrey Hinton: I didn’t figure out a few tricks. Yoshua stuck with it. I didn’t.

Yann LeCun: No, you didn’t. But it kind of refocused the entire industry and the research community, if you want, on deep learning, supervised learning, et cetera. And it took another few years, maybe around 2016, 2017, to tell people, like, this is not going to take us where we want. We need to do self-supervised learning. And LLMs, really, are the best example of this.

But what we’re working on now is applying this to other types of data like video and sensor data, which LLMs are really not very good at, at all. And that’s a new challenge for the next few years.

The Present Moment: Hype or Reality?

Madhumita Murgia: So that brings us actually to the present moment. And I think you’ll all have seen this crest of interest from people who had no idea what AI was before, who had no interest in it, and now everybody’s flocking to this. And this has become more than a technical innovation, right? There’s a huge business boom. It’s become a geopolitical strategy issue. And everybody’s trying to get their hands around what this is or their heads around it.

Jensen, I’ll come to you here first. I want you all to reflect on this moment now here. NVIDIA in particular has its name in the news every day, hour, week, and you have become the most valuable company in the world. So there’s something there that people want. Tell us about, are you worried that we are getting to the point where people don’t quite understand and we’re all getting ahead of ourselves and there’s going to be a reckoning that there’s a bubble that’s going to burst and then it will right itself?

And if not, what is the kind of biggest misconception about demand coming from AI that is different to, say, the dot-com era or that people don’t understand if that’s not the case?

Jensen Huang: During the dot-com era, during the bubble, the vast majority of the fiber deployed was dark, meaning the industry deployed a lot more fiber than it needed. Today, almost every GPU you could find is lit up and used. And as for the reason why, I think it’s important to take a step back and understand what AI is.

For a lot of people, AI is ChatGPT and it’s image generation, and that’s all true. That’s one of the applications of it. And AI has advanced tremendously in the last several years. The ability to not just memorize and generalize, but to reason and effectively think and ground itself through research, it’s able to produce answers and do things that are much more valuable now. It’s much more effective.

And the number of companies that are able to build businesses that are helpful to other businesses, for example, a software programming company, an AI software company that we use called Cursor, they’re very profitable and we use their software tremendously. And it’s incredibly useful. Or Abridge or OpenEvidence who are serving the healthcare industry doing very, very well, producing really good results. And so the AI capability has grown so much.

And as a result, we’re seeing these two exponentials that are happening at the same time. On the one hand, the amount of computation necessary to produce an answer has grown tremendously. On the other hand, the amount of usage of these AI models is also growing exponentially. These two exponentials are causing a lot of demand on compute.

AI Factories: A New Industrial Paradigm

Jensen Huang: Now, when you take a step back, you ask yourself fundamentally what’s different between AI today and the software industry of the past. Well, software in the past was precompiled, and the amount of computation necessary for the software is not very high. But in order for AI to be effective, it has to be contextually aware. It can only produce the intelligence at the moment. You can’t produce it in advance and retrieve it. That’s called content.

AI intelligence has to be produced and generated in real time. And so as a result, we now have an industry where the computation necessary to produce something that’s really valuable and high demand is quite substantial. We have created an industry that requires factories. That’s why I remind ourselves that AI needs factories to produce these tokens, to produce the intelligence.

And this has never happened before, where the computer is actually part of a factory. And so we need hundreds of billions of dollars of these factories in order to serve the trillions of dollars of industries that sit on top of intelligence.

You come back and take a look at software in the past. Software in the past, they’re software tools. They’re used by people. For the first time, AI is intelligence that augments people. And so it addresses labor. It addresses work. It does work.

Madhumita Murgia: So you’re saying, no, this is not a bubble.

Jensen Huang: I think we’re well in the beginning of the build out of intelligence. And the fact of the matter is most people still don’t use AI today. And someday in the near future, almost everything we do, every moment of the day, you’re going to be engaging AI somehow. And so between where we are today, where the use is quite low, to where we will be someday, where the use is just basically continuous, that build out is what we’re experiencing.

Madhumita Murgia: And even if the LLM runway runs out, you think GPUs and the infrastructure you’re building can still be of use in a different paradigm?

Jensen Huang: LLM is a piece of the AI technology. AIs are systems of models, not just LLMs. LLMs are a big part of it, but they’re systems of models. Irrespective of what we call it, we still have a lot of technology to develop for AI to be much more productive than it is today.

Beyond Language Models: The Evolution of AI

Madhumita Murgia: Who wants to jump in on this, especially if you disagree?

Yoshua Bengio: I don’t think we should call them LLMs anymore. They’re not language models anymore. They start as language models, at least that’s the pre-training, but more recently there’s been a lot of advances in making them agents. In other words, go through a sequence of steps in order to achieve something interactively with an environment, with people right now through a dialogue, but more and more with a computing infrastructure.

And the technology is changing. It’s not at all the same thing as what it was three years ago. I don’t think we can predict where the technology will be in two years, five years, ten years. But we can see the trends. One of the things I’m doing is trying to bring together a group of international experts to keep track of what’s happening with AI, where it is going, what are the risks, how are they being mitigated.

And the trends are very clear across so many benchmarks. Now, because we’ve had so much success in improving the technology in the past, it doesn’t mean that’s going to be the same in the future. So then there would be financial consequences if the expectations are not met. But in the long run, I completely agree.

Madhumita Murgia: But currently, what about the rest of you? Do you think that the valuations are justified in terms of what you know about the technology, the applications?

Bill Dally: I think there are three trends that sort of explain what’s going on. The first is the models are getting more efficient. If you look just at attention, for example, going from straight attention to GQA to MLA, you get the same or better results with far less computation. And that then drives demand, because things that may have been too expensive before become inexpensive, so that now you can do more with AI.

At the same time, the models are getting better. And maybe they’ll continue to get better with transformers and maybe a new architecture will come along, but we won’t go backwards. We’re going to continue to have better models.

Madhumita Murgia: That still means GPUs, even if they don’t have that transformer architecture?

Bill Dally: Absolutely. In fact, it makes them much more valuable compared to more specialized things because they’re more flexible and they can evolve with the models better. But then the final thing is I think we’ve just begun to scratch the surface on applications.

So almost every aspect of human life can be made better by having AI assist somebody in their profession, help them in their daily lives. And I think we’ve started to reach maybe one percent of the ultimate demand for this. So as that expands, the number of uses of this are going to go up. So I don’t think there’s any bubble here. I think we’re, like Jensen said, we’re riding a multiple exponential and we’re at the very beginning of it and it’s going to just keep going.

Madhumita Murgia: And in some ways NVIDIA is inured to that, because even if this paradigm changes and there are other types of AI and other architectures, you’re still going to need the atoms. So that makes sense for you. Did you want to jump in, Fei-Fei?

New Frontiers: Spatial Intelligence and Beyond

Fei-Fei Li: Yes, I do think that, of course, from a market point of view, it will have its own dynamics and sometimes it does adjust itself. But if you look at the long term trend, let’s not forget AI, by and large, is still a very young field, right? We walked into this room and on the wall, there were equations of physics. Physics has been a more than four hundred year old discipline, even if we look at modern physics. AI is less than seventy years old. If we go back to Alan Turing, that’s about seventy-five years. So there are a lot more new frontiers to come.

Jensen and Yoshua talk about LLMs and agents, those are more language based. But even if you do self-introspection of human intelligence, there’s more intelligent capabilities beyond language. I have been working on spatial intelligence, which is really the combination or the linchpin between perception and action where humans and animals have incredible ability to perceive, reason, interact with and create worlds that goes far beyond language.

And even today’s most powerful language based or LLM based models fail at rudimentary spatial intelligence tests. So from that point of view, as a discipline, as a science, there’s far more frontiers to conquer and to open up. That brings the applications, opens up more applications.

Madhumita Murgia: And you work at a company. And so you have the kind of dual perspective of being a researcher and working in a commercial space. Do you agree? Do you believe that this is all justified and you can see where this is all coming from? Or do you think we’re reaching an end here and we need to find a new path?

Yann LeCun: So I think there are several points of view for which we’re not in a bubble. And at least one point of view suggesting that we are in a bubble, but it’s totally different.

So we’re not in a bubble in a sense that there are a lot of applications to develop based on LLMs. LLM is the current dominant paradigm, and there’s a lot to milk there. This is what Bill was saying, to kind of help people in their daily lives with current technology, that technology needs to be pushed, and that justifies all the investment that is done on the software side and also on the infrastructure side.

Once we have smart wearable devices in everybody’s hands, assisting them in their daily lives, the amount of computation that would be required, as Jensen was saying, to serve all those people is going to be enormous. So in that sense, the investment is not wasted.

But there is a sense in which there is a bubble, and it’s the idea somehow that the current paradigm of LLM will be pushed to the point of having human level intelligence, which I personally don’t believe in and you don’t either. And we need kind of a few breakthroughs before we get to machines that really have the kind of intelligence we observe, not just in humans, but also animals. We don’t have robots that are nearly as smart as a cat, right?

And so we’re missing something big still, which is why AI progress is not just a question of more infrastructure, more data, more investment and more development of the current paradigm. It’s actually a scientific question of how do we make progress towards the next generation of AI.

The Path to Human-Level Intelligence

Madhumita Murgia: Which is why all of you are here, right? Because you actually sparked the entire thing off. And I feel like we’re moving much towards the engineering application side. But what you’re saying is we need to come back to what brought you here originally.

On that question of human level intelligence, we don’t have long left. I just want to do a quick fire, I’m curious. Can each of you say how long you think it will take until we reach that point where you believe we have machine intelligence equivalent to a human or even a clever animal like an octopus or whatever? How far away are we, just in years?

Yann LeCun: It’s not going to be an event.

Madhumita Murgia: Okay.

Yann LeCun: Because the capabilities are going to expand progressively in various domains.

Madhumita Murgia: Over what time period?

Yann LeCun: Maybe we’ll make some significant progress over the next five to ten years to come up with a new paradigm. And then maybe progress will come. But it will take longer than we think.

Fei-Fei Li: Parts of machines will supersede human intelligence, and part of machine intelligence will never be similar or the same as human intelligence. They are built for different purposes and they will…

Madhumita Murgia: When do we get to superseding?

The Question of AGI and Human Intelligence

Fei-Fei Li: Part of it is already here. How many of us can recognize twenty-two thousand objects in the world?

Madhumita Murgia: Do you not think an adult human can recognize twenty-two thousand objects?

Fei-Fei Li: At that kind of granularity and fidelity, no. How many adult humans can translate a hundred languages?

Madhumita Murgia: That’s harder, yeah.

Fei-Fei Li: So I think we should be nuanced and grounded in scientific facts. Just like airplanes fly, but they don’t fly like birds, machine-based intelligence will do a lot of powerful things, but there is a profound place for human intelligence to always be critical in our human society.

Jensen Huang: We have enough general intelligence to translate the technology into an enormous number of societally useful applications in the coming years. And with respect to…

Madhumita Murgia: In the coming year?

Jensen Huang: Yeah, yeah, yeah, we’re doing it today. And so I think one, we’re already there. And two, the other part of the answer is it doesn’t matter. Because at this point, it’s a bit of an academic question.

We’re going to apply the technology to, and the technology is going to keep on getting better. And we’re going to apply the technology to solve a lot of very important things from this point forward. And so I think the answer is it doesn’t matter.

Madhumita Murgia: And it’s now as well?

Jensen Huang: Yeah. You decide. Right?

Timeline Predictions and Capabilities

Geoffrey Hinton: If you refine the question a bit to say how long before if you have a debate with this machine, it’ll always win. I think that’s definitely coming within twenty years. We’re not there yet, but I think fairly definitely within twenty years we’ll have that. So if you define that as AGI, it’ll always win a debate with you. We’re going to get there in less than twenty years probably.

Madhumita Murgia: Do you have any questions?

Bill Dally: I’m sort of with Jensen that it’s the wrong question. Our goal is not to build AI to replace humans or to be better than humans. Our goal is to augment humans. So what we want to do is complement what humans are good at.

Humans can’t recognize twenty-two thousand categories, and most of us can’t solve these math olympiad problems. So we build AI to do that, so humans can do what is uniquely human, which is be creative and be empathetic and understand how to interact with other people in our world. And it’s not clear to me that AI will ever do that, but AI can be a huge assistance to humans.

The Path to Human-Level AI

Yoshua Bengio: So I’ll beg to differ on this. I don’t see any reason why at some point we wouldn’t be able to build machines that can do pretty much everything we can do. Of course, for now on the spatial and robotic side, it’s lagging. But there’s no conceptual reason why we couldn’t.

On the timeline, I think there’s a lot of uncertainty and that we should plan accordingly. But there is some data that I find interesting, where we see the capability of AIs to plan over different horizons growing exponentially fast over the last six years. And if we continue that trend, it would place AI at roughly the level that an employee has in their job within about five years.

Now, this is only one category of engineering tasks and there are many other things that matter. For example, one thing that can change the game is that many companies are aiming to focus on the ability of AI to do AI research. In other words, to do engineering, to do computer science and to design the next generation of AI, including maybe improving robotics and spatial understanding.

So I’m not saying it will happen, but the ability of AI to do better and better programming and understanding of algorithms is advancing very, very fast. And that could unlock many other things. We don’t know. And we should be really agnostic and not make big claims because there are a lot of possible futures there.

Closing Remarks

Madhumita Murgia: So our consensus is in some ways we think that future is here today, but there’s never going to be one moment. And the job of you all here today is to help guide us along this route until we get to a point where we’re working alongside these systems.

Very excited personally to see where we’re going to go with this. If we do this again in a year, it’ll be a different world. But thank you so much for joining us, for sharing your stories and for talking us through this huge kind of revolutionary moment. Thank you.

All: Thank you. Thank you.
